The lure of blogging is strong. Having guest-posted about problems with eternal inflation, Tom Banks couldn’t resist coming back for more punishment. Here he tackles a venerable problem: the interpretation of quantum mechanics. Tom argues that the measurement problem in QM becomes a lot easier to understand once we appreciate that even classical mechanics allows for non-commuting observables. In that sense, quantum mechanics is “inevitable”; it’s actually classical physics that is somewhat unusual. If we just take QM seriously as a theory that predicts the probability of different measurement outcomes, all is well.

Tom’s last post was “technical” in the sense that it dug deeply into speculative ideas at the cutting edge of research. This one is technical in a different sense: the concepts are presented at a level that second-year undergraduate physics majors should have no trouble following, but there are explicit equations that might make it rough going for anyone without at least that much background. The translation from LaTeX to WordPress is a bit kludgy; here is a more elegant-looking pdf version if you’d prefer to read that.

—————————————-

Rabbi Eliezer ben Yaakov of Nahariya said in the 6th century, “He who has not said three things to his students, has not conveyed the true essence of quantum mechanics. And these are Probability, Intrinsic Probability, and Peculiar Probability”.

Probability first entered the teachings of men through the work of that dissolute gambler Pascal, who was willing to make a bet on his salvation. It was a way of quantifying risk in the face of uncertainty. Implicit in Pascal’s thinking, and in that of all who came after him, was the idea that there was a certainty, even a predictability, but that we fallible humans may not always have enough data to make the correct predictions. This implicit assumption is completely unnecessary, and the mathematical theory of probability makes use of it only through one crucial assumption, which turns out to be wrong in principle but right in practice for many actual events in the real world.

For simplicity, assume that there are only a finite number of things that one can measure, in order to avoid too much math. List the possible measurements as a sequence

$$A = (a_1, a_2, \ldots, a_N)$$

The $a_i$ are the quantities being measured, and each can take on a finite number of values. A probability distribution then assigns a number $P(A)$ between zero and one to each possible outcome, and these numbers must add up to one. The so-called frequentist interpretation of these numbers is that if we did the same measurement a large number of times, then the fraction of times, or frequency, with which we’d find a particular result would approach the probability of that result in the limit of an infinite number of trials. This is mathematically rigorous, but only a fantasy in the real world, where we have no idea whether we have an infinite amount of time to do the experiments. The other interpretation, often called Bayesian, is that probability gives a best guess at what the answer will be in any given trial. It tells you how to bet. This is how the concept is used by most working scientists. You do a few experiments, see how the finite distribution of results compares to the probabilities, and then assign a confidence level to the conclusion that a particular theory of the data is correct. Even in flipping a completely fair coin, it’s possible to get a million heads in a row. If that happens, you’re pretty sure the coin is weighted, but you can’t know for sure.
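A short simulation shows the gap between the two readings. The Python sketch below simulates a fair coin; the function names and the random seed are our own illustrative choices. The observed frequency drifts toward 1/2 as the number of flips grows, but it is never guaranteed to equal 1/2. A run of a million heads has a probability that is astronomically small but strictly nonzero.

```python
import math
import random


def heads_frequency(n_flips: int, p_heads: float = 0.5, seed: int = 0) -> float:
    """Fraction of heads in n_flips simulated tosses of a coin with P(heads) = p_heads."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    heads = sum(1 for _ in range(n_flips) if rng.random() < p_heads)
    return heads / n_flips


def log10_prob_all_heads(n_flips: int, p_heads: float = 0.5) -> float:
    """log10 of the probability of n_flips heads in a row (logs avoid underflow to 0.0)."""
    return n_flips * math.log10(p_heads)


# Frequentist reading: the frequency approaches 1/2 only in the limit of many trials.
for n in (10, 1_000, 100_000):
    print(n, heads_frequency(n))

# A million heads in a row is allowed, just absurdly unlikely (about 10**-301030).
print(log10_prob_all_heads(1_000_000))
```

In practice the Bayesian reading is what gets used. A million heads does not prove the coin is weighted. It makes "fair coin" a hypothesis one would bet heavily against.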

Physical theories are often couched in the form of equations for the time evolution of the probability distribution, even in classical physics. One introduces “random forces” into Newton’s equations to “approximate the effect of the deterministic motion of parts of the system we don’t observe”. The classic example is the Brownian motion of particles we see under the microscope, where we think of the random forces in the equations as coming from collisions with the atoms in the fluid in which the particles are suspended. However, there’s no a priori reason why these equations couldn’t be the fundamental laws of nature. Determinism is a philosophical stance, a hypothesis about the way the world works, which has to be subjected to experiment just like anything else. Anyone who’s listened to a Geiger counter will recognize that the microscopic process of decay of radioactive nuclei doesn’t seem very deterministic.
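Here is what an equation with a random force looks like in practice. The sketch below is a minimal version that assumes the simplest overdamped Langevin model: one dimension, no inertia, and a constant diffusion coefficient D. These modelling choices are ours, not the post's. The equation does not predict where any single particle ends up. It predicts only statistics, such as the mean squared displacement 2Dt.

```python
import math
import random


def brownian_msd(n_particles: int = 2000, n_steps: int = 100, dt: float = 0.01,
                 diffusion: float = 1.0, seed: int = 1) -> float:
    """Mean squared displacement of overdamped 1D Brownian particles after n_steps.

    Each step adds a Gaussian random kick dx = sqrt(2 * D * dt) * xi, with xi ~ N(0, 1),
    the discretized form of dx/dt = sqrt(2 D) * (white-noise random force).
    """
    rng = random.Random(seed)
    kick = math.sqrt(2.0 * diffusion * dt)
    total = 0.0
    for _ in range(n_particles):
        x = 0.0
        for _ in range(n_steps):
            x += kick * rng.gauss(0.0, 1.0)
        total += x * x
    return total / n_particles


elapsed = 100 * 0.01  # n_steps * dt
print("simulated MSD:", brownian_msd(), " prediction 2*D*t:", 2.0 * 1.0 * elapsed)
```

Nothing in this code says whether the kicks stand for unobserved atoms or for genuinely fundamental randomness. The equation is the same either way.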

The place where the deterministic hypothesis and the laws of classical logic are put into the theory of probability is through the rule for combining probabilities of independent alternatives. A classic example is shooting particles through a pair of slits. One says, “The particle had to go through slit A or slit B, and the probabilities are independent of each other,” so

$$P(A \text{ or } B) = P(A) + P(B).$$

It seems so obvious, but it’s wrong, as we’ll see below. The probability sum rule, as the previous equation is called, allows us to define conditional probabilities. This is best understood through the example of Hurricane Katrina. The equations used by weather forecasters are probabilistic in nature. Long before Katrina made landfall, they predicted a probability that it would hit either New Orleans or Galveston. These are, more or less, mutually exclusive alternatives. Because these weather probabilities obey the sum rule, at least approximately, we can conclude that the prediction for what happens after we observe people suffering in the Superdome doesn’t depend on the fact that Katrina could have hit Galveston. That is, that observation allows us to set the probability that it hit Galveston to zero, and to rescale all other probabilities by a common factor so that the probability of hitting New Orleans becomes one.
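The conditioning step just described has two parts: zero out what the observation excludes, then rescale the rest by a common factor. It fits in a few lines of Python. The forecast numbers below are hypothetical, chosen only for illustration, and are not real Katrina data. The function name `condition` is likewise our own.

```python
def condition(prior: dict, ruled_out: set) -> dict:
    """Conditional probability: zero the outcomes an observation excludes, rescale the rest."""
    norm = sum(p for outcome, p in prior.items() if outcome not in ruled_out)
    if norm == 0:
        raise ValueError("the observation had zero prior probability")
    return {outcome: (0.0 if outcome in ruled_out else p / norm)
            for outcome, p in prior.items()}


# Hypothetical forecast numbers, for illustration only (not real Katrina data).
forecast = {"New Orleans": 0.6, "Galveston": 0.3, "elsewhere": 0.1}

# Observing the Superdome rules out every landfall except New Orleans.
print(condition(forecast, {"Galveston", "elsewhere"}))
# {'New Orleans': 1.0, 'Galveston': 0.0, 'elsewhere': 0.0}
```

All surviving outcomes are divided by the same factor, so their relative odds are unchanged. The rescaling is consistent only because the sum rule holds for these alternatives.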

Note that if we think of the probability function $P(x,t)$ for the hurricane to hit a point $x$ at time $t$ as a physical field, then this procedure seems non-local or acausal. The field changes instantaneously to zero at Galveston as soon as we make a measurement in New Orleans. Furthermore, our procedure “violates the weather equations”. Weather evolution seems to have two kinds of dynamics: the deterministic, local evolution of $P(x,t)$ given by the equations, and the causality-violating projection of the probability of Galveston to zero and rescaling of the probability of New Orleans to one, which is mysteriously caused by the measurement process. Recognizing $P$ to be a probability, rather than a physical field, shows that these objections are silly.

Nothing in this discussion depends on whether we assume the weather equations are the fundamental laws of physics of an intrinsically uncertain world, or come from neglecting certain unmeasured degrees of freedom in a completely deterministic system.

The essence of QM is that it forces us to take an intrinsically probabilistic view of the world, and that it does so by discovering an unavoidable probability theory underlying the mathematics of classical logic. In order to describe this in the simplest possible way, I want to follow Feynman and ask you to think about a single ammonia molecule, NH₃. A classical picture of this molecule is a pyramid with the nitrogen at the apex and the three hydrogens forming an equilateral triangle at the base. Let’s imagine a situation in which the only relevant measurement we could make was whether the pyramid was pointing up or down along the z axis. We can ask one question Q, “Is the pyramid pointing up?” and the molecule has two states in which the answer is either yes or no. Following Boole, we can assign these two states the numerical values 1 and 0 for Q, and then the “contrary question” 1 − Q has the opposite truth values. Boole showed that all of the rules of classical logic could be encoded in an algebra of independent questions, satisfying

$$Q_i Q_j = \delta_{ij} Q_i$$

where the Kronecker symbol $\delta_{ij} = 1$ if $i = j$ and $0$ otherwise, and the indices $i, j$ run from 1 to $N$, the number of independent questions. We also have $\sum_i Q_i = 1$, meaning that one and only one of the questions has the answer yes in any state of the system. Our ammonia molecule has only two independent questions, $Q$ and $1 - Q$. Let me also define $s_z = 2Q - 1$, which equals $\pm 1$ in the two different states. Computer aficionadas will recognize our two-question system as a bit.

We can relate this discussion of logic to our discussion of probability of measurements by introducing observables $A = \sum_i a_i Q_i$, where the $a_i$ are real numbers specifying the value of some measurable quantity in the state where only $Q_i$ has the answer yes. A probability distribution is then just a special case, $\rho = \sum_i p_i Q_i$, where each $p_i$ is non-negative and $\sum_i p_i = 1$.

Restricting attention to our ammonia molecule, we denote the two states as $|\pm z\rangle$ and summarize the algebra of questions by the equation

$$s_z |\pm z\rangle = \pm |\pm z\rangle$$

We say that “the operator $s_z$ acting on the states $|\pm z\rangle$ just multiplies them by (the appropriate) number”. Similarly, if $A = a_+ Q + a_- (1 - Q)$, then

$$A |\pm z\rangle = a_\pm |\pm z\rangle$$

The expected value of the observable $A^n$ in the probability distribution $\rho$ is

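$$\langle A^n \rangle = p_+ a_+^n + p_- a_-^n = \mathrm{Tr}(\rho A^n), \qquad \rho = p_+ Q + p_- (1 - Q).$$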

In the last equation we have used the fact that all of our “operators” can be thought of as two-by-two matrices acting on a two-dimensional space of vectors whose basis elements are $|\pm z\rangle$. The matrices can be multiplied by the usual rules, and the trace of a matrix is just the sum of its diagonal elements. Our matrices are

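$$Q = \begin{pmatrix} 1 & 0 \\ 0 & 0 \end{pmatrix}, \qquad s_z = \begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix}, \qquad A = \begin{pmatrix} a_+ & 0 \\ 0 & a_- \end{pmatrix}, \qquad \rho = \begin{pmatrix} p_+ & 0 \\ 0 & p_- \end{pmatrix}$$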

They’re all diagonal, so it’s easy to multiply them.
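Because every matrix here is diagonal, the whole single-bit algebra can be checked by storing each matrix as its pair of diagonal entries in the basis (|+z⟩, |−z⟩). The Python sketch below does this; the tuple representation and the helper names are our own choices. It verifies Boole's relations and the formula ⟨A^n⟩ = Tr(ρA^n) = p₊a₊^n + p₋a₋^n, using exact fractions.

```python
from fractions import Fraction

# A diagonal 2x2 matrix is stored as (entry for |+z>, entry for |-z>).
def mul(a, b):
    """Product of diagonal matrices: multiply entry by entry."""
    return tuple(x * y for x, y in zip(a, b))

def add(a, b):
    return tuple(x + y for x, y in zip(a, b))

def trace(a):
    return sum(a)

ONE = (1, 1)
Q = (1, 0)                                      # "Is the pyramid pointing up?"
NOT_Q = add(ONE, tuple(-q for q in Q))          # the contrary question 1 - Q
S_Z = add(tuple(2 * q for q in Q), (-1, -1))    # s_z = 2Q - 1

# Boole's algebra: Q_i Q_j = delta_ij Q_i, and the questions sum to 1.
assert mul(Q, Q) == Q and mul(NOT_Q, NOT_Q) == NOT_Q
assert mul(Q, NOT_Q) == (0, 0)
assert add(Q, NOT_Q) == ONE
assert S_Z == (1, -1)

def expect_power(p_plus, a_plus, a_minus, n):
    """<A^n> = Tr(rho A^n) with rho = p_+ Q + p_-(1 - Q) and A = a_+ Q + a_-(1 - Q)."""
    rho = (p_plus, 1 - p_plus)
    a = (a_plus, a_minus)
    a_n = ONE
    for _ in range(n):
        a_n = mul(a_n, a)
    return trace(mul(rho, a_n))

print(expect_power(Fraction(1, 4), 3, -1, 2))  # 1/4 * 9 + 3/4 * 1 = 3
```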

So far all we’ve done is rewrite the simple logic of a single bit as a complicated set of matrix equations, but consider the operation of flipping the orientation of the molecule, which for nefarious purposes we’ll call $s_x$:

$$s_x |\pm z\rangle = |\mp z\rangle$$

This has matrix

$$s_x = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}$$

Note that $s_z^2 = s_x^2 = 1$, and $s_x s_z = -s_z s_x = -i s_y$, where the last equality is just a definition. This definition implies that $s_y s_a = -s_a s_y$ for $a = x$ or $a = z$, and it follows that $s_y^2 = 1$. You can verify these equations by using matrix multiplication, or by thinking about how the various operations act on the states (which I think is easier). Now consider, for example, the quantity $B \equiv b_x s_x + b_z s_z$. Then $B^2 = b_x^2 + b_z^2$ (the cross terms cancel because $s_x$ and $s_z$ anticommute), which suggests that $B$ is a quantity that takes on the possible values $\pm\sqrt{b_x^2 + b_z^2}$. We can calculate
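The relations just stated can be checked with explicit 2×2 matrices. The pure-Python sketch below builds s_y from the post's definition s_x s_z = −i s_y, that is, s_y = i s_x s_z. It then checks each identity, including B² = (b_x² + b_z²)·1. The helper names and the sample values of b_x and b_z are our own.

```python
def matmul(a, b):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def lin(*terms):
    """Linear combination sum(c * M) of 2x2 matrices, given (c, M) pairs."""
    return [[sum(c * m[i][j] for c, m in terms) for j in range(2)] for i in range(2)]

def close(a, b, tol=1e-12):
    return all(abs(a[i][j] - b[i][j]) < tol for i in range(2) for j in range(2))

I2 = [[1, 0], [0, 1]]
S_Z = [[1, 0], [0, -1]]   # basis order (|+z>, |-z>)
S_X = [[0, 1], [1, 0]]    # flips the molecule: |+z> <-> |-z>
# The text defines s_y through s_x s_z = -i s_y, i.e. s_y = i s_x s_z.
S_Y = lin((1j, matmul(S_X, S_Z)))

assert close(matmul(S_Z, S_Z), I2) and close(matmul(S_X, S_X), I2)
assert close(matmul(S_X, S_Z), lin((-1, matmul(S_Z, S_X))))       # s_x, s_z anticommute
for s_a in (S_X, S_Z):
    assert close(matmul(S_Y, s_a), lin((-1, matmul(s_a, S_Y))))   # s_y anticommutes too
assert close(matmul(S_Y, S_Y), I2)

# B = b_x s_x + b_z s_z squares to (b_x^2 + b_z^2) times the identity.
b_x, b_z = 0.6, 1.7
B = lin((b_x, S_X), (b_z, S_Z))
print(matmul(B, B))   # diagonal, both entries b_x**2 + b_z**2 (up to rounding)
```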

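$$\langle B^n \rangle = \mathrm{Tr}(\rho B^n)$$

for any choice of probability distribution. If $n = 2k$ it’s just

$$\langle B^{2k} \rangle = (b_x^2 + b_z^2)^k,$$

whereas if $n = 2k + 1$ it’s

$$\langle B^{2k+1} \rangle = (b_x^2 + b_z^2)^k \, \mathrm{Tr}(\rho B) = (b_x^2 + b_z^2)^k \, b_z (p_+ - p_-).$$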

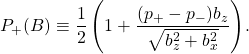

This is exactly the same result we would get if we said that there was a probability P+ (B) for B to take on the value √{bz2 + bx2} and probability P− (B) = 1 − P+ (B), to take on the opposite value, if we choose

The most remarkable thing about this formula is that even when we know the answer to Q with certainty (p+ = 1 or 0), B is still uncertain.
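The equivalence claimed here can be tested numerically. The sketch below compares the matrix moments Tr(ρBⁿ) with the moments of a classical variable that equals +b with probability P₊(B) = ½[1 + (b_z/b)(p₊ − p₋)] and −b otherwise, where b = √(b_x² + b_z²). The sample values of p₊, b_x and b_z are arbitrary choices of ours. Setting p₊ = 1 then shows that B stays uncertain even when Q is known for sure.

```python
import math

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def moment(p_plus, b_x, b_z, n):
    """<B^n> = Tr(rho B^n) for B = b_x s_x + b_z s_z and rho = diag(p_+, 1 - p_+)."""
    B = [[b_z, b_x], [b_x, -b_z]]
    b_n = [[1.0, 0.0], [0.0, 1.0]]
    for _ in range(n):
        b_n = matmul(b_n, B)
    return p_plus * b_n[0][0] + (1 - p_plus) * b_n[1][1]

def p_plus_of_B(p_plus, b_x, b_z):
    """P_+(B) = (1 + (b_z / b)(p_+ - p_-)) / 2, with b = sqrt(b_x^2 + b_z^2)."""
    b = math.hypot(b_x, b_z)
    return 0.5 * (1 + (b_z / b) * (2 * p_plus - 1))

def two_valued_moment(p_plus, b_x, b_z, n):
    """n-th moment of a classical variable equal to +b with prob P_+(B), -b otherwise."""
    b = math.hypot(b_x, b_z)
    P = p_plus_of_B(p_plus, b_x, b_z)
    return P * b**n + (1 - P) * (-b) ** n

# The matrix moments agree with the classical two-valued distribution for every n.
for n in range(10):
    q, c = moment(0.7, 3.0, 4.0, n), two_valued_moment(0.7, 3.0, 4.0, n)
    assert math.isclose(q, c, rel_tol=1e-9, abs_tol=1e-9)

# Q known with certainty (p_+ = 1), yet B is still uncertain.
print(p_plus_of_B(1.0, 3.0, 4.0))  # approximately 0.9
```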

We can repeat this exercise with any linear combination $b_x s_x + b_y s_y + b_z s_z$. We find that, in general, if we force one such combination to be known with certainty, then every other combination $c_x s_x + c_y s_y + c_z s_z$ whose vector $(c_x, c_y, c_z)$ is not parallel to $(b_x, b_y, b_z)$ is uncertain. Non-parallel vectors are precisely those for which the two linear combinations fail to commute as matrices.
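The link between "not parallel" and "does not commute" follows from the identity [b·s, c·s] = 2i (b × c)·s. This identity holds for the matrices s_x, s_y, s_z fixed above. The Python sketch below checks the identity on sample vectors, which are arbitrary choices of ours. It then confirms that parallel vectors give commuting combinations and non-parallel vectors do not.

```python
def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def dot_s(c):
    """c_x s_x + c_y s_y + c_z s_z, with s_y = [[0, -i], [i, 0]] as fixed by s_x s_z = -i s_y."""
    cx, cy, cz = c
    return [[cz, cx - 1j * cy], [cx + 1j * cy, -cz]]

def commutator(a, b):
    ab, ba = matmul(a, b), matmul(b, a)
    return [[ab[i][j] - ba[i][j] for j in range(2)] for i in range(2)]

def cross(b, c):
    return (b[1] * c[2] - b[2] * c[1], b[2] * c[0] - b[0] * c[2], b[0] * c[1] - b[1] * c[0])

def commutes(b, c, tol=1e-12):
    return all(abs(x) < tol for row in commutator(dot_s(b), dot_s(c)) for x in row)

# [b.s, c.s] = 2i (b x c).s, so the commutator vanishes exactly when b and c are parallel.
b, c = (1.0, 2.0, -0.5), (0.3, -1.0, 2.0)
lhs = commutator(dot_s(b), dot_s(c))
rhs = [[2j * x for x in row] for row in dot_s(cross(b, c))]
assert all(abs(lhs[i][j] - rhs[i][j]) < 1e-12 for i in range(2) for j in range(2))

print(commutes((1.0, 2.0, -0.5), (2.0, 4.0, -1.0)))  # True: parallel vectors
print(commutes((1.0, 0.0, 0.0), (0.0, 0.0, 1.0)))    # False: s_x and s_z
```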

Pursuing the mathematics of this further would lead us into the realm of eigenvalues of Hermitian matrices, complete orthonormal bases and other esoterica. But the main point to remember is that any system we can think about in terms of classical logic inevitably contains an infinite set of variables in addition to the ones we initially took to be the maximal set of measurable quantities. When our original variables are known with certainty, these other variables are uncertain, but the mathematics gives us completely determined formulas for their probability distributions.

Another disturbing fact about the mathematical probability theory for incompatible observables that we’ve discovered is that it does NOT satisfy the probability sum rule. This is because, once we start thinking about incompatible observables, the notion of “either this or that” is not well defined. In fact, we’ve seen that when we know “definitely for sure” that $s_z$ is 1, the probability for $B$ to take on its positive value could be any number between zero and one, depending on the ratio of $b_z$ and $b_x$.

Thus QM contains questions that are neither independent nor dependent, and the probability sum rule $P(s_z \text{ or } B) = P(s_z) + P(B)$ does not make sense, because the word “or” is undefined for non-commuting operators. As a consequence we cannot apply the conditional probability rule to general QM probability predictions. This appears to cause a problem when we make a measurement that seems to give a definite answer. We’ll explain below that the issue here is the meaning of the word measurement. It means the interaction of the system with macroscopic objects containing many atoms. One can show that conditional probability is a sensible notion, with incredible accuracy, for such objects, and this means that we can interpret QM for such objects as if it were a classical probability theory. The famous “collapse of the wave function” is nothing more than an application of the rules of conditional probability to macroscopic objects, for which they apply.

The double-slit experiment, famously discussed in the first chapter of Feynman’s lectures on quantum mechanics, is another example of the failure of the probability sum rule. The question of which slit the particle goes through is a choice between two alternative histories. In Newton’s equations, a history is determined by an initial position and velocity, but Heisenberg’s famous uncertainty relation is simply the statement that position and velocity are incompatible observables, which don’t commute as matrices, just like $s_z$ and $s_x$. So the statement that either one history or the other happened does not make sense, because the two histories interfere.

Before leaving our little ammonia molecule, I want to tell you about one more remarkable fact, which has no bearing on the rest of the discussion but shows the power of quantum mechanics. Way back at the top of this post, you could have asked me, “What if I wanted to orient the ammonia along the x axis, or some other direction?” The answer is that the operator $n_x s_x + n_y s_y + n_z s_z$, where $(n_x, n_y, n_z)$ is a unit vector, has definite values in precisely those states where the molecule is oriented along this unit vector. The whole quantum formalism of a single bit is invariant under three-dimensional rotations. And who would have ever thought of that? (Pauli, that’s who.)
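The rotation claim can be checked directly. The sketch below uses the standard spin-1/2 state (cos θ/2, e^{iφ} sin θ/2) as the state "oriented along" n = (sin θ cos φ, sin θ sin φ, cos θ). This parametrization is the textbook one, not something spelled out in the post. The code checks that n·s has the definite value +1 in that state, and that the averages of s_x, s_y, s_z are the components of n, just as for an arrow rotated to point along n. The angles are arbitrary sample values.

```python
import cmath
import math

def dot_s(n):
    """n_x s_x + n_y s_y + n_z s_z as a 2x2 complex matrix (basis |+z>, |-z>)."""
    nx, ny, nz = n
    return [[nz, nx - 1j * ny], [nx + 1j * ny, -nz]]

def apply(m, v):
    return [m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1]]

def expectation(m, v):
    """<v| m |v> for a normalized state v (real because m is Hermitian)."""
    w = apply(m, v)
    return (v[0].conjugate() * w[0] + v[1].conjugate() * w[1]).real

def oriented_along(theta, phi):
    """State with the molecule oriented along n = (sin t cos p, sin t sin p, cos t)."""
    return [complex(math.cos(theta / 2)), cmath.exp(1j * phi) * math.sin(theta / 2)]

theta, phi = 1.1, 0.4
n = (math.sin(theta) * math.cos(phi), math.sin(theta) * math.sin(phi), math.cos(theta))
v = oriented_along(theta, phi)

# n.s has the definite value +1 in this state ...
assert all(abs(x - y) < 1e-12 for x, y in zip(apply(dot_s(n), v), v))
# ... and the averages of (s_x, s_y, s_z) point along n, as a rotated arrow should.
averages = [expectation(dot_s(axis), v) for axis in ((1, 0, 0), (0, 1, 0), (0, 0, 1))]
print(averages, n)
```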

The fact that QM was implicit in classical physics was realized a few years after the invention of QM, in the 1930s, by Koopman. Koopman formulated ordinary classical mechanics as a special case of quantum mechanics, and in doing so introduced a whole set of new observables, which do not commute with the (commuting) position and momentum of a particle and are uncertain when the particle’s position and momentum are definitely known. The laws of classical mechanics give rise to equations for the probability distributions for all these other observables. So quantum mechanics is inescapable. The only question is whether nature is described by an evolution equation which leaves a certain complete set of observables certain for all time, and what those observables are in terms of things we actually measure. The answer is that ordinary positions and momenta are NOT simultaneously determined with certainty.

Which raises the question of why it took us so long to notice this, and why it’s so hard for us to think about and accept. The answers to these questions also resolve “the problem of quantum measurement theory”. The answer lies essentially in the definition of a macroscopic object. First of all, it means something containing a large number $N$ of microscopic constituents. Let me call them atoms, because that’s what’s relevant for most everyday objects. For even a very tiny piece of matter weighing about a thousandth of a gram, $N \sim 10^{20}$. There are a few quantum states of the system per atom, let’s say 10 to keep the numbers round. So the system has $10^{10^{20}}$ states. Now consider the motion of the center of mass of the system. The mass of the system is proportional to $N$, so Heisenberg’s uncertainty relation tells us that the mutual uncertainty of the position and velocity of the system is of order $1/N$. Most textbooks stop at this point and say this is small and so the center of mass behaves in a classical manner to a good approximation.

In fact, this misses the central point, which is that under most conditions, the system has of order $10^N$ different states, all of which have the same center-of-mass position and velocity (within the prescribed uncertainty). Furthermore, the internal state of the system is changing rapidly on the time scale of the center-of-mass motion. When we compute the quantum interference terms between two approximately classical states of the center-of-mass coordinate, we have to take into account that the internal time evolution for those two states is likely to be completely different. The chance that it’s the same is roughly $10^{-N}$, the chance that two states picked at random from the huge collection will be the same. It’s fairly simple to show that the quantum interference terms, which violate the classical probability sum rule for the probabilities of different classical trajectories, are of order $10^{-N}$. This means that even if we could see the $1/N$ effects of uncertainty in the classical trajectory, we could model them by ordinary classical statistical mechanics, up to corrections of order $10^{-N}$.
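The exponential suppression can be seen in a toy model. This model is our own simplification, not the calculation the post alludes to. Take a two-branch superposition a|0⟩ + b|1⟩ in which each of n constituents ends up in one of two internal states, depending on the branch, and suppose those two internal states have overlap cos θ. The interference (off-diagonal) term of the reduced density matrix is then multiplied by the total overlap cos^n θ. The Python sketch builds the environment states explicitly for small n and then extrapolates with logarithms. The per-constituent overlap of 1/10 in the last step is an assumption, chosen to mirror the "10 states per atom" counting in the text.

```python
import math
from functools import reduce

def kron(u, v):
    """Tensor product of two real state vectors."""
    return [x * y for x in u for y in v]

def environment(n, theta):
    """n constituents, each in the state (cos theta, sin theta)."""
    return reduce(kron, [[math.cos(theta), math.sin(theta)]] * n, [1.0])

def interference_term(a, b, n, theta):
    """Off-diagonal element a * b * <E_0|E_1> of the reduced density matrix (real amplitudes)."""
    e0, e1 = environment(n, 0.0), environment(n, theta)
    return a * b * sum(x * y for x, y in zip(e0, e1))

a = b = 1 / math.sqrt(2)
for n in (0, 1, 5, 10):
    print(n, interference_term(a, b, n, theta=0.5))   # shrinks like cos(0.5)**n

# Extrapolation (assumed overlap 1/10 per constituent): suppression ~ 10**-N.
N = 10**20
print("log10 of suppression:", N * math.log10(0.1))
```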

It’s pretty hard to comprehend how small a number this is. As a decimal, it’s a decimal point followed by 100 billion billion zeros and then a one. The current age of the universe is less than a billion billion seconds. So if you wrote one zero every hundredth of a second, you couldn’t write this number in the entire age of the universe. More relevant is the fact that in order to observe the quantum interference effects on the center-of-mass motion, we would have to do an experiment over a time period of order $10^N$. I haven’t written the units of time. The smallest unit of time is defined by Newton’s constant, Planck’s constant and the speed of light. It’s $10^{-44}$ seconds. The age of the universe is about $10^{61}$ of these Planck units. The difference between measuring the time in Planck times or in ages of the universe is a shift of the exponent from $N = 10^{20}$ to $N = 10^{20} - 61$, and is completely in the noise of these estimates. Moreover, the quantum interference experiment we’re proposing would have to keep the system completely isolated from the rest of the universe for these incredible lengths of time. Any coupling to the outside effectively increases the size of $N$ by huge amounts.
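The arithmetic in this paragraph can be redone in a few lines. The sketch below uses N = 10²⁰ from the text. The age of the universe (about 13.8 billion years, 4.35 × 10¹⁷ s) and the Planck time (5.39 × 10⁻⁴⁴ s) are standard values supplied by us as inputs.

```python
import math

N = 10**20                 # constituents, the post's round number for ~1 mg of matter
AGE_UNIVERSE_S = 4.35e17   # about 13.8 billion years, in seconds (our input)
PLANCK_TIME_S = 5.39e-44   # Planck time in seconds (our input)

# Writing the N zeros of 10**-N at one zero per hundredth of a second:
seconds_to_write = N / 100
print(seconds_to_write / AGE_UNIVERSE_S)     # about 2.3 ages of the universe

# The age of the universe in Planck units:
log10_age_planck = math.log10(AGE_UNIVERSE_S / PLANCK_TIME_S)
print(log10_age_planck)                      # about 60.9, i.e. roughly 10**61

# Re-expressing a time of 10**N Planck units in ages of the universe only shifts
# the exponent by ~61, which is invisible next to N = 10**20:
print(N - log10_age_planck == N)             # True at double precision
```

The last line makes the "in the noise" remark concrete. Subtracting 61 from 10²⁰ does not even change the double-precision value.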

Thus, for all purposes, even those of principle, we can treat quantum probabilities for even mildly macroscopic variables as if they were classical, and apply the rules of conditional probability. This is all we are doing when we “collapse the wave function” in a way that seems (to the untutored) to violate causality and the Schrodinger equation. The general line of reasoning outlined above is called the theory of decoherence. All physicists find it acceptable as an explanation of the reason for the practical success of classical mechanics for macroscopic objects. Some physicists find it inadequate as an explanation of the philosophical “paradoxes” of QM. I believe this is mostly due to their desire to avoid the notion of intrinsic probability, and to attribute physical reality to the Schrodinger wave function. Curiously, many of these people think that they are following in the footsteps of Einstein’s objections to QM. I am not a historian of science, but my cursory reading of the evidence suggests that Einstein understood completely that there were no paradoxes in QM if the wave function was thought of merely as a device for computing probability. He objected to the contention of some in the Copenhagen crowd that the wave function was real and satisfied a deterministic equation, and tried to show that that interpretation violated the principles of causality. It does, but the statistical treatment is the right one. Einstein was wrong only in insisting that God doesn’t play dice.

Once we have understood these general arguments, both quantum measurement theory and our intuitive unease with QM are clarified. A measurement in QM is, as first proposed by von Neumann, simply the correlation of some microscopic observable, like the orientation of an ammonia molecule, with a macro-observable like a pointer on a dial. This can easily be achieved by normal unitary evolution. Once this correlation is made, quantum interference effects in further observation of the dial are exponentially suppressed, we can use the conditional probability rule, and all the mystery is removed.
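von Neumann's picture of measurement needs nothing beyond unitary evolution. The Python sketch below is a drastic simplification of our own. A single two-state "pointer" stands in for the macroscopic dial, and a controlled-NOT gate stands in for the interaction. After the correlating step, the system's reduced density matrix has no off-diagonal (interference) terms, only the probabilities |a|² and |b|². In a real device the pointer has of order 10^N internal states, which is what makes the correlation effectively irreversible.

```python
import math

def cnot(state):
    """Unitary controlled-NOT on amplitudes [c00, c01, c10, c11] (system first, pointer second):
    flips the pointer when the system is |1>. It only permutes amplitudes."""
    c00, c01, c10, c11 = state
    return [c00, c01, c11, c10]

def reduced_system(state):
    """Trace out the pointer: rho[i][j] = sum_k c[i][k] * conj(c[j][k])."""
    c = [[state[0], state[1]], [state[2], state[3]]]
    return [[sum(c[i][k] * c[j][k].conjugate() for k in range(2)) for j in range(2)]
            for i in range(2)]

a, b = math.sqrt(0.3), math.sqrt(0.7)
ready = [a, 0.0, b, 0.0]      # (a|0> + b|1>) tensor |pointer at rest>
measured = cnot(ready)         # a|00> + b|11>: pointer now correlated with the system

print(reduced_system(ready))     # off-diagonal a*b: interference still visible
print(reduced_system(measured))  # off-diagonal 0: just the probabilities 0.3 and 0.7
```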

It’s even easier to understand why humans don’t “get” QM. Our brains evolved according to selection pressures that involved only macroscopic objects like fruit, tigers and trees. We didn’t have to develop neural circuitry that had an intuitive feel for quantum interference phenomena, because there was no evolutionary advantage to doing so. Freeman Dyson once said that the book of the world might be written in Jabberwocky, a language that human beings were incapable of understanding. QM is not as bad as that. We CAN understand the language if we’re willing to do the math, and if we’re willing to put aside our intuitions about how the world must be, in the same way that we understand that our intuitions about how velocities add are only an approximation to the correct rules given by the Lorentz group. QM is worse, I think, because it says that logic, which our minds grasp as the basic, correct formulation of rules of thought, is wrong. This is why I’ve emphasized that once you formulate logic mathematically, QM is an obvious and inevitable consequence. Systems that obey the rules of ordinary logic are special QM systems where a particular choice among the infinite number of complementary QM observables remains sharp for all times, and we insist that those are the only variables we can measure. Viewed in this way, classical physics looks like a sleazy way of dodging the general rules. It achieves a more profound status only because it also emerges as an exponentially good approximation to the behavior of systems with a large number of constituents.

To summarize: All of the so-called non-locality and philosophical mystery of QM is really shared with any probabilistic system of equations and collapse of the wave function is nothing more than application of the conventional rule of conditional probabilities. It is a mistake to think of the wave function as a physical field, like the electromagnetic field. The peculiarity of QM lies in the fact that QM probabilities are intrinsic and not attributable to insufficiently precise measurement, and the fact that they do not obey the law of conditional probabilities. That law is based on the classical logical postulate of the law of the excluded middle. If something is definitely true, then all other independent questions are definitely false. We’ve seen that the mathematical framework for classical logic shows this principle to be erroneous. Even when we’ve specified the state of a system completely, by answering yes or no to every possible question in a compatible set, there are an infinite number of other questions one can ask of the same system, whose answer is only known probabilistically. The formalism predicts a very definite probability distribution for all of these other questions.

Many colleagues who understand everything I’ve said at least as well as I do, are still uncomfortable with the use of probability in fundamental equations. As far as I can tell, this unease comes from two different sources. The first is that the notion of “expectation” seems to imply an expecter, and most physicists are reluctant to put intelligent life forms into the definition of the basic laws of physics. We think of life as an emergent phenomenon, which can’t exist at the level of the microscopic equations. Certainly, our current picture of the very early universe precludes the existence of any form of organized life at that time, simply from considerations of thermodynamic equilibrium.

The frequentist approach to probability is an attempt to get around this. However, its insistence on infinite limits makes it vulnerable to the question about what one concludes about a coin that’s come up heads a million times. We know that’s a possible outcome even if the coin and the flipper are completely honest. Modern experimental physics deals with this problem every day both for intrinsically QM probabilities and those that arise from ordinary random and systematic fluctuations in the detector. The solution is not to claim that any result of measurement is definitely conclusive, but merely to assign a confidence level to each result. Human beings decide when the confidence level is high enough that we “believe” the result, and we keep an open mind about the possibility of coming to a different conclusion with more work. It may not be completely satisfactory from a philosophical point of view, but it seems to work pretty well.

The other kind of professional dissatisfaction with probability is, I think, rooted in Einstein’s prejudice that God doesn’t play dice. With all due respect, I think this is just a prejudice. In the 18th century, certain theoretical physicists conceived the idea that one could, in principle, measure everything there was to know about the universe at some fixed time, and then predict the future. This was wild hubris. Why should it be true? It’s remarkable that this idea worked as well as it did. When certain phenomena appeared to be random, we attributed that to the failure to make measurements that were complete and precise enough at the initial time. This led to the development of statistical mechanics, which was also wildly successful. Nonetheless, there was no real verification of the Laplacian principle of complete predictability. Indeed, when one enquires into the basic physics behind much of classical statistical mechanics one finds that some of the randomness invoked in that theory has a quantum mechanical origin. It arises after all from the motion of individual atoms. It’s no surprise that the first hints that classical mechanics was wrong came from failures of classical statistical mechanics like the Gibbs paradox of the entropy of mixing, and the black body radiation laws.

It seems to me that the introduction of basic randomness into the equations of physics is philosophically unobjectionable, especially once one has understood the inevitability of QM. And to those who find it objectionable, all I can say is “It is what it is”. There isn’t any more. All one must do is account for the successes of the apparently deterministic formalism of classical mechanics when applied to macroscopic bodies, and the theory of decoherence supplies that account.

Perhaps the most important lesson for physicists in all of this is not to mistake our equations for the world. Our equations are an algorithm for making predictions about the world, and it turns out that those predictions can only be statistical. That this is so is demonstrated by the simple observation of a Geiger counter and by the demonstration by Bell and others that the statistical predictions of QM cannot be reproduced by a more classical statistical theory with hidden variables, unless we allow for grossly non-local interactions. Some investigators into the foundations of QM have concluded that we should expect to find evidence for this non-locality, or that QM has to be modified in some fundamental way. I think the evidence all goes in the other direction: QM is exactly correct and inevitable and “there are more things in heaven and earth than are conceived of in our naive classical philosophy”. Of course, Hamlet was talking about ghosts…

Carl,

I don’t think you’re right about the weather equations re: Katrina. If I take the initial conditions way back at the time when it wasn’t clear whether it would hit N.O. or Galveston, then the probability for hitting Galveston doesn’t go to zero at the time Katrina hits. What happens in actual weather forecasts is that we continually make new measurements and apply the conditional probability rule to throw away those parts of the old predictions that observation has proven wrong. In this way we get a distribution which gradually zeroes in on N.O. But if we take only the very early data, and don’t make any other observation until we ask which city got hit, then we have to make a sudden “non-local” contraction of the distribution from one that predicted a reasonable chance for both cities to be hit to one that predicts only N.O.

To those of you who believe in a mystical “Reality” that does not consist in observation of macroscopic objects, let me illustrate briefly the concept of “unhappening”.

The name is perhaps due to Susskind and Bousso, but I first heard the idea from Charles Bennett. Let’s think about writing the letter S on a piece of paper. We can say that “happened” and the letter S is “real”. Now, take a rock made out of anti-matter and (very quickly if you don’t want to burn your hand 🙂 ) wrap the paper around it, as in the well-known game. Observers far away see a big explosion, but more importantly, according to the laws of QM, all the information that we previously considered to be “the reality of the letter S on the piece of paper” is encoded in the quantum phase correlations between the states of the photons (for simplicity I’m assuming that the annihilation products are all photons) that are rushing out into space at the speed of light. The QM wave function of the paper has “undecohered” (I’m deliberately using an ugly phrase here) and the “reality of the letter S” is now a property of spooky correlations between photons in very different places.

So I would claim that our intuitive notion of reality and of things definitely happening is based on our brains’ lack of experience with microphysical problems.

Here’s my suggestion for how SOMEONE might come to a more intuitive understanding of QM than our own. Imagine the dreams of the quantum computing aficionadas and those of the AI community are both realized, and we manage to build a quantum computer which becomes self-aware. Since its brain, unlike our own, uses quantum processes for computation, it MIGHT have some kind of “intuition” about QM that we can’t achieve. Imagine that we ask it to explain things to us, and get the answer, “It would be like trying to teach calculus to a dog”….

#23 Scott: You are correct as far as something as complex as a brain goes.

The suggestion I was clumsily trying for was that most (?) forms of objective collapse don’t give a preferred status to a conscious observer as a measurement (and those that do have even bigger issues…). Even as a thought experiment, this exercise forces them to face the question of what constitutes a “measurement” that causes “collapse”: 1000 atoms? 100? 10? As a thought experiment it is the natural extension of Schrodinger’s cat. As a practical experiment, it would be nice to show that collapse is simply a bad assumption.

I’ve seen some very clever attempts to rescue collapse: one recently is the suggestion that collapse can happen spontaneously and randomly to any particle in a system, and then ripples through the rest of the system; the more particles you have, the sooner one of them will collapse. This is very clever… except that it begins from the assumption that there is such a thing as collapse that needs explaining.

#26 Yes, your description is correct insofar as it applies to our knowledge of Katrina. My point is that as an analogy for how QM works, it’s confusing, because we know that the probability of hitting Galveston doesn’t suddenly collapse to zero, because in the macroscopic world there are inevitably other observers that we are ignoring for the sake of the analogy. Does that make more sense?

As for the rest of your post, yes, I agree entirely. There is no reason to expect that we should be equipped to intuitively understand QM. The best we might be able to hope for is to understand a mathematical description of how it works. To extend your AI example, I recall reading (and I wish I could attribute this properly) a suggestion that it might be possible to put such a computer into a superposition of states for a period of time, and potentially be able to preserve the AI’s knowledge that it had been in such a state. You could then ask it how it “feels” to be in a superposition… but again, there is no guarantee you’d get back an answer that we could understand.

Tom Banks has given a nice discussion of probability and quantum mechanics (QM) but seems to have left open the question as to whether the quantum state (say in conjunction with some set of rules for how to interpret it) fully specifies our reality or not. One view is that QM assigns probabilities to mutually exclusive outcomes but that only one of the outcomes actually occurs, so the full specification of reality, including which outcome actually occurs, is not a consequence purely of the quantum state and of some set of rules. This view would seem to agree at least partially with what Einstein wrote in a 1945 letter to P. S. Epstein (quoted in M. F. Pusey, J. Barrett, and T. Rudolph, http://arxiv.org/abs/1111.3328, which Matt Leifer mentioned in the first response to Tom’s latest guest blog): “I incline to the opinion that the wave function does not (completely) describe what is real, but only a (to us) empirically accessible maximal knowledge regarding that which really exists … This is what I mean when I advance the view that quantum mechanics gives an incomplete description of the real state of affairs.”

An alternative view, which I personally find more attractive, is that the quantum state (in conjunction with some rules) does fully specify the reality of our universe or multiverse (leaving aside God and other entities which He may have also created). In this case the probabilities the rules give from the quantum state must not be merely something like propensities for potentialities to be converted to actualities, with the question of which actuality in reality occurs not being fully specified by the quantum state. Rather, the quantum probabilities may be measures for multiple existing actualities, something like frequencies (but not necessarily restricted to be ratios of integers, i.e., to rational numbers).

A broad framework in which this second viewpoint occurs is the many-worlds version of quantum theory, in which the quantum state never collapses but rather describes many actually existing Everett ‘worlds’ (each just part of the total reality, so that these are not the ‘worlds’ of philosophical discourse that denote possible states of affairs for the totality of reality). If one views the Everett worlds as making up a continuum with a normalized measure, so that there are an uncountably infinite number of worlds for each different outcome, then one can say that the quantum probability for a specific outcome is the fraction of the measure of the continuum with that outcome. This then allows the quantum probabilities to be interpreted rather as frequencies (though not necessarily as rational numbers) in an infinite ensemble of Everett worlds. This viewpoint would thus be a generalization of the frequentist view that Tom mentioned to the case in which the quantum theory provides its own infinite ensemble (the continuum of Everett many worlds), rather than requiring that “if we did the same measurement a large number of times, then the fraction of times or frequency with which we’d find a particular result would approach the probability of that result in the limit of an infinite number of trials,” as Tom nicely explained the more restricted interpretation of the frequentist view.
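The restricted frequentist reading quoted above (the fraction of trials giving a result approaches its probability as the number of trials grows) can be illustrated with a short simulation. This is a minimal sketch under stated assumptions: the outcome probability is taken to be the textbook spin-1/2 value cos²(θ/2) for an analyser tilted by a hypothetical angle θ = π/5 from the preparation axis, and a seeded pseudo-random generator stands in for nature. Every finite frequency it prints is a ratio of integers, while the limit it approaches, (5 + √5)/8, is irrational, which is the gap the continuum-of-worlds measure described above is meant to close.

```python
import math
import random


def simulate_frequency(p_up: float, n_trials: int, seed: int = 0) -> float:
    """Fraction of 'up' results in n_trials independent simulated measurements.

    Each trial yields 'up' with probability p_up; the pseudo-random generator
    is only a stand-in for physical randomness (an assumption of this sketch).
    """
    rng = random.Random(seed)
    ups = sum(1 for _ in range(n_trials) if rng.random() < p_up)
    return ups / n_trials


# Hypothetical setup: spin-1/2 prepared along z, measured along an axis tilted by theta.
theta = math.pi / 5
p_up = math.cos(theta / 2) ** 2  # equals (5 + sqrt(5)) / 8, an irrational number

for n in (10, 1_000, 100_000):
    freq = simulate_frequency(p_up, n, seed=42)
    print(f"n = {n:>7}: frequency = {freq:.5f}, probability = {p_up:.5f}")
```

Each printed frequency is rational by construction; only the limit can be irrational.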

Now I am NOT saying that the quantum state by itself logically implies the probabilities, or the measures for the continua of Everett many worlds in that framework. One could imagine a bare quantum framework, such as what the late Sidney Coleman advocated, in which there are just amplitudes and expectation values but no probabilities. However, to test theories against observations, it seems very useful to be able to hypothesize that there are probabilities for observations. Then we need rules for extracting these probabilities from the quantum state. In quantum cosmology, this is what I regard as the essence of the measure problem (which thus seems to me to be related to the measurement problem of quantum mechanics). I have shown in “Insufficiency of the Quantum State for Deducing Observational Probabilities,” “The Born Rule Dies” (published in Journal of Cosmology and Astroparticle Physics, JCAP07(2009)008, at http://iopscience.iop.org/1475-7516/2009/07/008/, as “The Born Rule Fails in Cosmology”), “Born Again,” and “Born’s Rule Is Insufficient in a Large Universe” that in a universe large enough for there to be copies of observations, the probabilities for observations cannot be expectation values of projection operators (a simple mathematization of Born’s rule that was stated more vaguely in Max Born’s Nobel-Prize-winning paper). It is not yet clear what the replacement for Born’s rule should be. To me, the most conservative approach would be to say that the relative probability for each possible observation is the expectation value of some quantum operator associated with the corresponding observation, but what these operators are remains unknown.
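The “simple mathematization of Born’s rule” mentioned above (probability of an outcome = expectation value of the projection operator onto that outcome) can be made concrete for a single two-level system. A minimal sketch in plain Python: the helper names `projector` and `expectation` and the particular state are illustrative assumptions, and the toy deliberately contains no copies of observers, so it shows the rule being criticized rather than the large-universe situation in which it is argued to fail.

```python
import cmath
import math

Vector = list[complex]
Matrix = list[list[complex]]


def projector(u: Vector) -> Matrix:
    """Outer product |u><u| for a normalized vector u."""
    return [[ui * uj.conjugate() for uj in u] for ui in u]


def expectation(op: Matrix, psi: Vector) -> complex:
    """Expectation value <psi|op|psi>."""
    n = len(psi)
    op_psi = [sum(op[i][j] * psi[j] for j in range(n)) for i in range(n)]
    return sum(psi[i].conjugate() * op_psi[i] for i in range(n))


# Illustrative normalized state: cos(pi/8)|0> + e^{i pi/3} sin(pi/8)|1>.
psi = [complex(math.cos(math.pi / 8)), cmath.exp(1j * math.pi / 3) * math.sin(math.pi / 8)]

s = 1 / math.sqrt(2)
bases = {
    "z": ([1 + 0j, 0j], [0j, 1 + 0j]),
    "x": ([s + 0j, s + 0j], [s + 0j, -s + 0j]),
}
for name, (up, down) in bases.items():
    p_up = expectation(projector(up), psi).real
    p_down = expectation(projector(down), psi).real
    print(f"{name}-basis: P(up) = {p_up:.4f}, P(down) = {p_down:.4f}, sum = {p_up + p_down:.4f}")
```

For each measurement basis the two projector expectation values are non-negative and sum to one, which is what lets them be read as probabilities in the first place.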

It used to be assumed that the complete set of dynamical laws for the universe (e.g., the full set of quantum operators and their algebra in a quantum framework) is the Theory of Everything, but, particularly with the brilliant work of James Hartle and Stephen Hawking in their proposal for the Wave Function of the Universe, published in Physical Review D 28, 2960-2975 (1983), available at http://prd.aps.org/abstract/PRD/v28/i12/p2960_1 (even if perhaps not quite right in detail; see Susskind’s challenge to the Hartle-Hawking no-boundary proposal and possible resolutions), it has been recognized that one also needs the quantum state. Now with the demonstrated failure of Born’s rule and the measure problem of cosmology, we realize that one also needs as-yet-unknown rules for extracting the probabilities of observations from the quantum state. However, using the words of Richard Feynman to me in 1986 about his former Ph.D. advisor John Wheeler on another issue, the “radical conservative” viewpoint on this issue is that the quantum state, together with the rules for extracting the probabilities of observations (interpreted as normalized measures for many actually existing observations), is a complete specification of the reality of our universe or multiverse.

I just happened to be looking through our department’s QM course website and found a slogan from my colleague Scott Thomas that crystallizes what I was trying to say about Reality:

OBJECTIVE REALITY IS AN EMERGENT PHENOMENON

Tom, re. #26

It would be very helpful if you could clarify what you intended with the example of the paper. You write ‘To those of you who believe in a mystical “Reality” that does not consist in observation of macroscopic objects, then [let] me illustrate briefly the concept of “unhappening”.’ Why the word “mystical” here? I believe that the overwhelming majority of the physical world is not macroscopic and/or not observed. For example: the precise details of the convection in the Sun right now, the distribution of dark matter in the Milky Way, the present weather patterns on the far side of Neptune, everything that happened in the first few nanoseconds after the Big Bang, the geometry of space-time at the Planck scale. I believe that Socrates had a particular blood type, even though no one ever did or ever will observe what it was. This is not “mystical” reality; it is physical reality: the very thing that physics is about.

Now somehow, the fact that one can destroy a piece of paper is supposed to cast doubt on such reality. How can that be? If you wrote an S on the piece of paper, then of course one can say it “happened” and was “real”: that says no more nor less than that you wrote an S on the paper. If we destroy the paper with anti-matter (or just burn it), it may become practically impossible for distant observers, or historians, to tell what was written on it. So what? If the laws of physics are deterministic, then the “information” about what was on the paper exists in principle at all times (more accurately: on every Cauchy surface), because the physical state on each such surface together with the laws implies the complete history of the universe. If the dynamical laws are not deterministic (as you seem to accept), then the “information” gets lost. But nonetheless, you wrote an S on the paper, whether or not distant observers or future historians can tell. What can this possibly have to do with the idea that “things definitely happen”? Lots of things (most things) that happen are never observed or known. If we did not believe that there were such things, what would we be building expensive telescopes for? There is a world out there that exists independently of being observed, and it is that world that physics is trying to understand.

#26: Your unhappening example is bad as it is. Memory traces in the brain of the S writer provide the needed environmental decoherence even after the paper has been annihilated. But that is really easy to correct; take a larger lump of antimatter and annihilate the writer as well. 🙂
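The decoherence point can be put in one formula. If the writer’s memory (or any other environment E) ends up in state |E0⟩ or |E1⟩ depending on the system state, the joint state α|0⟩|E0⟩ + β|1⟩|E1⟩ gives the system a reduced density matrix whose off-diagonal element is ρ01 = αβ*⟨E1|E0⟩. A minimal sketch, assuming a two-dimensional toy “memory” whose two record states are separated by a hypothetical angle φ: interference terms shrink as the records become distinguishable and vanish when they are orthogonal. It illustrates only the suppression of interference, which, as is pointed out further down the thread, does not by itself solve the measurement problem.

```python
import math


def reduced_coherence(alpha: complex, beta: complex,
                      env0: list[complex], env1: list[complex]) -> complex:
    """Off-diagonal element rho_01 of the system's reduced density matrix.

    For the joint state alpha|0>|E0> + beta|1>|E1>, tracing out the environment
    gives rho_01 = alpha * conj(beta) * <E1|E0>.
    """
    overlap = sum(e1.conjugate() * e0 for e0, e1 in zip(env0, env1))  # <E1|E0>
    return alpha * beta.conjugate() * overlap


alpha = beta = complex(1 / math.sqrt(2))  # equal superposition of the two system states
e0 = [1 + 0j, 0j]                         # toy memory state recording outcome 0
for phi in (0.0, math.pi / 6, math.pi / 3, math.pi / 2):
    # Toy memory state recording outcome 1, tilted by phi; phi = pi/2 is a perfect record.
    e1 = [complex(math.cos(phi)), complex(math.sin(phi))]
    print(f"phi = {phi:.3f}: |rho_01| = {abs(reduced_coherence(alpha, beta, e0, e1)):.4f}")
```

With φ = 0 (no record at all) the coherence keeps its full value of 1/2; with φ = π/2 (a perfect record) it is zero up to rounding.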

#31: According to some interpretations of quantum mechanics, maybe Socrates had a superposition of blood types and that superposition never collapsed… (Then again, maybe Socrates was a fictitious character invented by Plato.)

A quantum formulation of a classical system will have classical superselection sectors, with one corresponding to each classical configuration. Any operator invented that mixes superselection sectors is unphysical and should not be counted.

Henry #34

Our job here is to figure out which interpretations of quantum mechanics are coherent or should be taken seriously. Do you think that such an interpretation, if it exists, should be a topic of serious discussion?

Will #33

Superselection at best does the same job as decoherence…which is to say, it does not solve the measurement problem.

So do people agree with Tom that entanglement is in no way non-local and is actually just a trivial ‘readout’ of a result that was fixed as soon as the entangled particles were projected in different directions?

ursus_maritimus #36 He doesn’t say the result was fixed; he says the correlation was fixed at the time the entangled particles were created. There is no counterfactual definiteness here: the particles are in an up/down or down/up state, but we cannot know which, even in principle, until a measurement is made on one of the particles. This is not the same as the naive Bertlmann’s socks situation, where a counterfactually definite state of the socks exists before anyone looks at either of them.

Tim Maudlin #34: I’m curious, which interpretations (if any) have you found coherent or worth taking seriously?

(Personally, I’ve found pretty much every interpretation’s criticisms of the other interpretations to be extremely persuasive and cogent. 🙂 )

The other interpretation, often called Bayesian, is that probability gives a best guess at what the answer will be in any given trial.

How do we test the claim of “best”? Can we do it without getting frequentist?

I don’t have much useful to contribute, but I can at least go on record with my current beliefs. I’m one of those barbarians who thinks the wave function is “real,” in any useful sense of the word “real.” And that all it does is to evolve unitarily via the Schrodinger equation. (The point of view that has been saddled with the label “many-worlds.”) Our job is to establish a convincing interpretation, i.e. to map the formalism of unitary QM onto the observed world. There are certainly difficulties here, and I’ll confess that I don’t really see how treating the wave function simply as a rule for predicting probabilities helps to solve them. (In particular, as Tim emphasizes, it seems to leave hanging the old Copenhagen problem of when wave functions collapse, which in this interpretation becomes “when we have made an observation over which we can conditionalize future predictions.”)

One problem is why we observe certain states (live cat, dead cat) and not others (superposition of live cat + dead cat). Here I strongly think that a combination of decoherence plus the actual Hamiltonian of the world (in particular, locality in space) will provide an acceptable answer, even if I don’t think I could personally put all the pieces together. I’m more impressed by the problem in MWI about how to get out the Born rule for probabilities, which Tom seems to simply take as “what the wave function does.” I can’t swear that this attitude is somehow illegitimate, although I’d certainly like to do better.

I thought the discussion of non-commuting observables in classical mechanics was actually the most interesting part of the post.

Tim

I definitely believe in “a world out there”, but I think that our brains are incapable of coming to an intuitive understanding of it, by which I mean one which will satisfy your notion of Reality in which a thing either exists or doesn’t. The essence of QM is that even when every possible definite thing, which can be known at a given time, is known, then there are things which are uncertain. That’s what the math says. And we know that by manipulating those mathematical rules, and interpreting the things that look like probability distributions as probability distributions, we make predictions and give explanations about the micro-world which are in exquisite agreement with experiment. And “local realist” alternative explanations, as we all know, cannot agree with those experiments.

I actually think the first part of my post is much more interesting than this retread of all the discussions of whether decoherence does or does not solve the measurement problem. It seems to me significant that you cannot write down the mathematical formulation of classical logic, which is the mode of thought motivated by the realist position, without automatically raising all of the issues of QM. They’re there in the math and the world uses them.
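The claim that non-commuting operators are already present in the mathematics of classical probability can be checked on the smallest possible example. A minimal sketch, assuming one particular (Koopman-style) reading rather than reproducing the construction in the post: the observables and dynamics of a classical system with two states are represented as linear operators on functions of those two states. The projector P0 onto state 0 and the hypothetical “flip” dynamics that swaps the two states are, as matrices, (1 + σ_z)/2 and σ_x; they fail to commute, whereas two “which state?” projectors commute.

```python
Matrix = list[list[int]]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    """Product of two square matrices."""
    n = len(x)
    return [[sum(x[i][k] * y[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def commutator(x: Matrix, y: Matrix) -> Matrix:
    """[x, y] = xy - yx."""
    xy, yx = matmul(x, y), matmul(y, x)
    n = len(x)
    return [[xy[i][j] - yx[i][j] for j in range(n)] for i in range(n)]


P0 = [[1, 0], [0, 0]]    # "is the system in state 0?" (indicator function of state 0)
P1 = [[0, 0], [0, 1]]    # "is the system in state 1?"
FLIP = [[0, 1], [1, 0]]  # classical dynamics that swaps the two states

print(commutator(P0, P1))    # [[0, 0], [0, 0]]: the "which state?" observables commute
print(commutator(P0, FLIP))  # [[0, 1], [-1, 0]]: projector and dynamics do not
```

Nothing quantum has been put in by hand here: both matrices act on ordinary functions of two classical states.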

For me, the philosophical conclusion from all of this is the one summarized in Scott Thomas’ slogan. Everything for which we can affirm the ideas of “reality” by experiment is one of these big composite objects for which QM predictions are indistinguishable from those of classical statistics obeying the conditional probability rule. My conclusion from this is that because our brains evolved only to deal with such objects, we think of Objective Reality in the wrong way. The real rules of the world just won’t fit into that straitjacket. And if we can’t come to a satisfactory intuitive understanding of them, that’s our defect, not a defect of the rules. There’s no principle that guarantees that we can understand the microscopic rules of the world. Indeed, if one believes in the evolutionary story of the origin of our consciousness (I call it only a story, not because I disagree with the overwhelming evidence for evolution, but because I think we don’t understand consciousness well enough to know whether that story is missing some important ingredient), then it seems incredible that we understand as much as we do.

By the way, you may not know this, but one of the predictions of a universe with a positive cosmological constant, as ours seems to have, is that the Sombrero galaxy will eventually pass out of our cosmological horizon, never to be seen again. (Actually, just as with an object falling into a black hole, we will never see it leave the horizon, but the signals from it will be redshifted into oblivion.) According to the Holographic Principle and the Principle of Black Hole Complementarity, this is to be interpreted, from our point of view, as the galaxy being absorbed into a huge thermal system living on the horizon. On the other hand, from the Sombrerinos’ point of view, they go on their merry way and WE are absorbed into a thermal system on their horizon. The two points of view are Complementary, meaning that macroscopic observations by the Sombrerinos do not commute with our own. It’s perfectly consistent, because we’re using different notions of time, and any experiment we can do, which would bring back data proving that they actually burned up, has the effect of burning them up. These theoretical ideas have not yet been subjected to experimental test, but at least my version of them leads to experimental consequences, which are probably falsifiable by the LHC.

This may help you understand why I think Objective Reality is overrated…

It seems that the upshot of this post is that quantum mechanics is what you get when you have some observable things in a system that don’t commute, i.e. it matters in which order you measure them, or something along those lines. From this perspective, the problem is shifted to asking which things have this property of having non-commuting observables. We already know that non-relativistic quantum mechanics isn’t an exact framework to describe everything that happens in the universe (consider relativity, for example). So this somehow says that there are things out there with non-commuting observables, and quantum mechanics is the logical framework to describe such things.

It’d be interesting to try to formalize the example of the hurricane, or something like it, and to see what all the odd things about quantum mechanics would say in that case. And if there are such examples (maybe from finance, or other fields as well?), then perhaps by building up a repertoire of such examples we could approach an intuitive understanding of quantum mechanics.

Scott # 37

Bell himself promoted both the Bohmian approach and the GRW collapse approach as theories where all of the physics is in the equations (rather than in some vague surrounding talk) that can demonstrably recover all verified predictions of non-relativistic QM. They both postulate a physical world that evolves irrespective of “measurement” or “observation”. There are extensions of both to cover field theory/particle creation and annihilation. These are theories that provide clear, exact answers to any clearly stated physical question. I would be happy to consider any theory that is equally precisely formulated. I have problems with Many Worlds concerning the interpretation of probability, and connection to experimental outcomes.

No doubt, all of these theories have unpleasant aspects. But they are clear and consistent, and remind you what a clear physics can be. And they are ongoing projects, being expanded and refined.

#37 James

Then how does the entangled particle come to ‘know’ what state to be in (up or down) ‘instantaneously’ once the observer makes the measurement on its partner that allows us to know in principle?

Professor Hillel is said to have been asked by a student to explain his Quantum Physics 101 textbook while standing on one foot. Hillel, standing on one foot, replied to the student “Quantum Theory basically explains that some things cannot be measured precisely and thus nothing is certain; the rest of the book is just commentary; now go home and do your homework problems.”

Wow!! What a great discussion.

Tom # 41

Thanks for replying, but I’m afraid I still don’t see the point of the example with the note. If you destroy the note, and the physics is deterministic, then the “information” about what was on the note still exists but is, as it were, widely scattered. Why think that undermines any interesting physical view?

Classical mechanics, of course, is not formulated in terms of what anyone knows. The complete physical state of a system is specified by a point in phase space, and under the usual Hamiltonian laws of motion that phase point evolves deterministically. Given that information, and the exact physical specification of any possible physical interaction of this system with another, the physics yields a perfectly determinate outcome. So there is no intrinsic “uncertainty” or “probability” in classical physics with respect to the outcome of any exactly specified physical situation. One could, in principle, ask whether there are physical limits that arise in classical mechanics concerning how much one could know about a system, but nothing in your post suggests such limits. Meaningful physical questions are about the outcomes of experiments, not about abstract “observables” cooked up in the math. And in classical physics, if you know all that can be known about a system (the exact phase point) and all that can be known about the experimental situation (the exact phase point), then there will be a unique, exact prediction of the outcome of the experiment. If you disagree with this, please describe a physical experiment in a classical setting where it fails.

#41

Well, if the most talked-about preprint on the internet is to be taken as true, belief that the wave function is real and not a handy statistical abstraction will soon hardly be thought of as ‘barbarian’.

ursus_maritimus #44

Ay, there’s the rub. 🙂

We don’t know how; we just know that this is the way nature works, as has been demonstrated in many experiments by Clauser, Aspect, Zeilinger et al.

Of course, we can make suggestions, but if your suggestion requires both locality and counterfactual definiteness to be true then it does not describe nature.

Nature doesn’t really care if we humans find her difficult to comprehend.
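The sense in which a suggestion that requires both locality and counterfactual definiteness “does not describe nature” is usually made quantitative with the CHSH inequality. A minimal, idealized sketch (perfect detectors, no loopholes, and the standard textbook choice of angles; all of these are assumptions): any assignment of predetermined ±1 answers to the two settings on each side gives |S| ≤ 2, and mixtures of such local assignments cannot do better because S is linear in their weights, whereas the quantum singlet correlation E(a, b) = −cos(a − b) reaches |S| = 2√2.

```python
import itertools
import math


def singlet_correlation(a: float, b: float) -> float:
    """Quantum prediction for <A B> when the two halves of a spin singlet are
    measured along coplanar axes at angles a and b (radians)."""
    return -math.cos(a - b)


def chsh(corr, a: float, a2: float, b: float, b2: float) -> float:
    """CHSH combination S = E(a,b) - E(a,b') + E(a',b) + E(a',b')."""
    return corr(a, b) - corr(a, b2) + corr(a2, b) + corr(a2, b2)


def local_deterministic_max() -> int:
    """Largest |S| over all predetermined +-1 answers A(a), A(a'), B(b), B(b')."""
    best = 0
    for A, A2, B, B2 in itertools.product((-1, 1), repeat=4):
        best = max(best, abs(A * B - A * B2 + A2 * B + A2 * B2))
    return best


quantum_s = chsh(singlet_correlation, 0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4)
print(f"local bound: {local_deterministic_max()}")
print(f"quantum |S|: {abs(quantum_s):.4f} (2*sqrt(2) = {2 * math.sqrt(2):.4f})")
```

The brute-force loop checks all 16 deterministic local strategies; the experiments by Clauser, Aspect, Zeilinger and others measure S directly and find values above 2.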

“I’m one of those barbarians who thinks the wave function is “real,” in any useful sense of the word “real.” And that all it does is to evolve unitarily via the Schrodinger equation. (The point of view that has been saddled with the label “many-worlds.”) Our job is to establish a convincing interpretation, i.e. to map the formalism of unitary QM onto the observed world.”

So, barbarian, where can I buy a wave function / probability meter? 😉 Although I’d agree with Tom in that I think Jaynes was wrong to assert – as he did – that there *must* be some discernible ‘reality’ underlying QM in order to avoid the “probability / wave function is ‘real’” mind projection fallacy, mind projection fallacy it surely is. There are at least as many ways (Aerts, Rovelli, De Muynck…) out of that rabbit hole as there are out of the “FTL neutrino would destroy SR and causality” rabbit hole.