What is the mind, and what does it try to do? An overly simplified materialist view might be that the mind emerges from physical processes in the brain. But you can be a materialist and still recognize that there is more to the mind than just the brain: the rest of our bodies play a role, and arguably we should count physical artifacts that contribute to our memory and cognition as part of "the mind." Or so argues today's guest, philosopher/cognitive scientist Andy Clark. As to what the mind does, it tries to predict what happens next. This simple idea provides a powerful lens through which to interpret all the different things our minds do, including the idea that "perception is controlled hallucination."


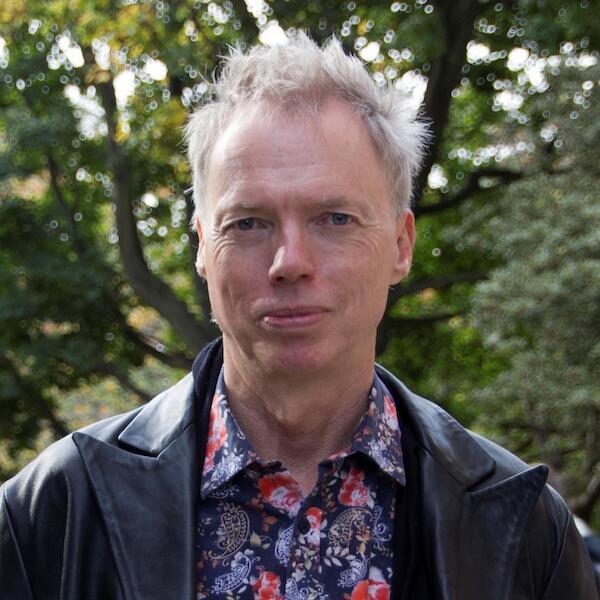

Andy Clark received his Ph.D. in philosophy from the University of Sussex. He is currently Professor of Cognitive Philosophy at Sussex. He was Director of the Philosophy/Neuroscience/Psychology Program at Washington University in St. Louis, and Director of the Cognitive Science Program at Indiana University. His new book is The Experience Machine: How Our Minds Predict and Shape Reality.

0:00:00.0 Sean Carroll: Hello everyone, welcome to the Mindscape Podcast. I'm your host, Sean Carroll. Someone once pointed out that when we conceptualize human beings, we're always using metaphors that depend on our current best technologies. You know, when clocks were first invented, wristwatches and so forth, it was a clockwork universe. When robots and machines came on the scene, we thought of organic beings as kind of like that. And now we have computers, besides which we have cameras and video cameras and audio recorders and so forth. So, very roughly, we tend to think about a person as kind of like a robot with video cameras for eyes and audio recorders for ears, hooked up to a computer inside; the sensory apparatus brings information into the computer, which then tells the robot body what to do. It's a simple, straightforward, compelling picture. It's also wrong. That's not actually a very good description of what we are or how we behave. For one thing, intelligent design is not the way that human beings came about. We evolved over many, many years, and we weren't aiming for that.

0:01:17.6 SC: You have to think about what kind of architecture actually best serves the purposes of surviving, procreating, reproductive fitness and so forth. And it turns out to be very different. So today's guest is Andy Clark, who is a philosopher and a cognitive scientist. In fact, his title at the University of Sussex is Professor of Cognitive Philosophy. He's very well known in philosophy, very, very highly cited for thinking about the brain and the mind, how they're related and how they work. He became very famous through a co-authored paper with David Chalmers where they proposed the extended mind hypothesis: the idea that what you should count as your mind is not just your brain, but also all the little extensions of the brain that help us think, whether they're inside our bodies or things we scribble down on a piece of paper or use to enhance our memories or calculational abilities, and so forth. He also has a great interest in the idea of the brain as a predictive machine, and that is the subject of his new book, The Experience Machine: How Our Minds Predict and Shape Reality.

0:02:26.7 SC: So the idea here is that the brain is not a computer just bringing in sense data and then thinking about it. Our brains are constantly constructing a set of predictions for what's going to happen next. What is going to be the situation in which the body finds itself? What is the sensory data that we're going to bring in? Then you compare what you're actually experiencing with what the brain was predicting, and you try to play the game of minimizing the error between what you predicted and what you actually perceive. This might sound like a small change of emphasis: rather than being a passive receptor of information, the brain is actively engaged in a feedback loop. But it has very, very significant consequences for how we think about thinking, and how we think about fixing thinking, right? When we go wrong one way or another, whether it's being in pain or having a mental disorder of some sort, how do we get better? Taking seriously that the brain is a prediction machine is very useful here, as well as for philosophical problems: how you carve up nature, what the brain does, what consciousness is, what free will is, and so forth.

0:03:38.6 SC: So this is one of those podcasts that touches on many issues we're interested in here at Mindscape, from consciousness to time, all over the place. An occasional reminder: you can support Mindscape by pledging at Patreon. Go to patreon.com/Seanmcarroll and pledge a dollar or two or whatever you like for each episode. In return you get a sense of fulfillment, but of course also ad-free versions of the podcast, as well as the ability to ask questions for the Ask Me Anything episodes. If you're interested, you can also leave reviews of Mindscape at Apple Podcasts or wherever there are reviews. Those reviews help draw other listeners in. So if you think Mindscape is worth listening to, help make it an even bigger community, and that would be awesome for all concerned. So with that, let's go.

[music]

0:04:42.7 SC: Andy Clark, welcome to the Mindscape Podcast.

0:04:44.3 Andy Clark: Hey, it's great to be here. Thanks for having me.

0:04:46.7 SC: You know, you've done, as many people have of a certain age, many interesting things over your career. Your new book is the prediction... What is the new book? [chuckle] What is the title?

0:04:58.4 AC: It's The Experience Machine.

0:05:00.7 SC: The Experience Machine. I keep wanting to say the Prediction Machine 'cause obviously prediction is playing a big role there.

0:05:06.1 AC: The Experience Machine, about how predictions shape and build reality.

0:05:11.4 SC: Good, good. Yeah, reality will be a theme that we want to get to. But I can't give up the opportunity to also talk about extended mind and extended cognition and things like that. So I thought it would make sense to talk about extended mind first and then get into prediction. Does that make sense logically to you?

0:05:29.4 AC: I think that's a good route. That's... Certainly that was my route, so why not?

0:05:31.7 SC: Good, good. Let's do it. So there are people out there who think that the mind is not even related to the brain, which is funny to me, but there are other people, like yourself perhaps, who think that the brain is just one little part of our minds and our thinking. I'm not going to put words into your mouth. What does it mean to talk about the extended mind?

0:05:56.7 AC: Yeah. I mean, this is a view that I've held and defended for many years. It goes back to a piece of work that I did with David Chalmers, many years ago, back in 1998; he's famous, after all, for his almost dualistic views on consciousness. The basic idea that Chalmers and I agreed on is that when it comes to unconscious cognition, there's no reason to think that the brain is the limit of the machinery that can count as part of an individual's cognitive processing. It has to be unconscious processing, because it's mostly unconscious processing that we think gets extended; there's a whole other debate to have about conscious processing. The idea is that, moment by moment, the brain doesn't really care where information is stored. It cares about what information can be accessed, how fluidly you can get at it, and whether or not you've got some idea that it's there to be got at at all. So the idea was that calls to biological memory and calls to external stores, like a notebook or nowadays maybe a smartphone, work in fundamentally the same kind of way.

0:07:16.7 AC: And actually, I think the predictive processing story ends up cashing that out in an interesting way. So we can circle back to that later on, maybe.

0:07:28.0 SC: Great.

0:07:29.7 AC: But that's the core idea: the machinery of mind doesn't all have to be in the head.

0:07:36.8 SC: David Chalmers, of course, another former Mindscape podcast guest. So we have an illustrious alumni base. But let's make it a little bit more concrete. What do we mean? You know, I think that what immediately comes to mind is I can remember almost no phone numbers now because they're all in my smartphone. Does that count as extended mind?

0:07:55.5 AC: That counts, I think. After all, you have fluent access to at least a functional equivalent of that information as and when you need it, because you just pick up the phone and there it is. If, on the other hand, you had all those numbers stored in a notebook, but the notebook was in your basement and you had to run down into the basement whenever you needed it, that wouldn't count, because you wouldn't have the constant, robust availability of that resource woven into the way you go about every kind of problem that daily life throws at you. So there is, I think, a genuine intuition that whatever the machinery of mind is, it had better be more or less portable; it had better be going around the world wherever you're going around the world, or wherever bio-you, I should say, is going around the world. For that reason, I think you need robust and trusted kinds of access, but only to the extent that the biological stuff is robust and trusted. You know, now and again my biological memory goes down, now and again I don't trust what it throws at me. But it should be more or less in the same ballpark.

0:09:10.2 SC: [chuckle] Well, okay, so robust and trusted access. Is that the criterion? I mean, I guess an immediate question that comes to mind is where do I draw the boundary? Does everything in the world count as my mind?

0:09:24.4 AC: Yeah, I mean, it's one of the original worries about this view. It's one that we used to call, or I used to call, cognitive bloat: the worry that there's no good way of stopping this process. But actually, if you take that robust availability and trust reasonably seriously, that does rule an awful lot of things out. In addition, there's something which, to be honest, I've never given a full account of, to do with the delicate temporal nature of the integration of the biological system with this non-biological prop or aid or resource, whatever it is. The brain, if you like, can make calls to this stuff in ways where it kind of knows about the timing of those calls, and it doesn't all have to go through a little bottleneck of attention. You don't have to think to yourself, "Oh, I'd better get that information from over there."

0:10:20.6 AC: That's not what it feels like to go about your daily business. So it's the idea of something where, if it were taken away suddenly, you would feel very much at a loss, a kind of general-purpose loss. There would be many situations you'd find yourself in where you were no longer able to perform in the ways you expected yourself to perform. So there's something there about temporal dovetailing that could do with a bit more unpacking.

0:10:48.3 SC: Does it relate to ongoing conversations I'm very interested in about emergence, strong versus weak emergence, or just being able to coarse-grain the world in such a way that you have units with explanatory power?

0:11:04.3 AC: Yes, well, I think it certainly relates to a lot of stuff about emergence, because the idea here would be that by dovetailing things together in this way you get basically an emergent you. You are the emergent property: the thing that goes around with a sense of its own capacities but doesn't really care how they get cashed out. Something that I think comes out quite strongly in the predictive processing stories is that brains are location-neutral when they estimate where they can get good information back from. They're very good at estimating what they're uncertain about and going and getting more information, but it doesn't really matter where that information is. As long as it's accessible, trusted and there when you want it, that's fine. And then there'll be a story to tell about how the actual selection process operates. But we'll come around to that once we talk about predictive processing. Let's stick with the extended mind for now.
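As a rough illustration of "location-neutral" information foraging, here is a minimal Python sketch; it is not a model from Clark's work. It assumes the agent's uncertainty is a Gaussian variance, scores each candidate source by how much one reading from it would be expected to shrink that variance, subtracts a made-up access cost, and never looks at whether the source is in the head or in the world. The `Source` class, the cost values and the source names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Source:
    name: str
    location: str       # "internal" or "external"; deliberately ignored when choosing
    noise_var: float    # variance of a reading from this source (lower = more reliable)
    access_cost: float  # hypothetical effort/time cost of consulting the source


def expected_reduction(prior_var: float, noise_var: float) -> float:
    """Drop in variance after one Gaussian reading (conjugate Gaussian update)."""
    posterior_var = 1.0 / (1.0 / prior_var + 1.0 / noise_var)
    return prior_var - posterior_var


def choose_source(prior_var: float, sources: list[Source]) -> Source:
    """Pick the source with the best uncertainty reduction net of access cost.

    `location` never enters the score: the choice is location-neutral.
    """
    return max(
        sources,
        key=lambda s: expected_reduction(prior_var, s.noise_var) - s.access_cost,
    )


if __name__ == "__main__":
    recall = Source("unaided recall", "internal", noise_var=4.0, access_cost=0.1)
    phone = Source("phone contacts", "external", noise_var=0.25, access_cost=0.5)
    notebook = Source("basement notebook", "external", noise_var=0.25, access_cost=5.0)
    print(choose_source(4.0, [recall, phone, notebook]).name)  # prints "phone contacts"
```

With these illustrative numbers the phone beats both unaided recall and the notebook in the basement; relabeling the phone as `"internal"` leaves the choice unchanged, which is the sense of location neutrality the sketch is meant to capture.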

0:12:04.5 SC: I mean, I guess one way that philosophers think about their job is that they're supposed to be carving nature at the joints. Is this a sort of betrayal of that goal, because you're including my smartphone and my, you know, card catalog as part of my mind? Or is it an improvement, because the action of our brains really relies on all this stuff?

0:12:31.5 AC: Yeah, that's a very good and very delicate question. When Dave Chalmers and I put it forward, we tried to argue that this was the right way to carve nature at the joints, basically because of functional similarities. That said, it doesn't always intuitively seem like the right way to carve nature at the joints. So I think we're making a decision here, and that decision, oddly enough, is in part a moral decision. I'm very much moved by the analogy with prosthetic limbs, for example. If you took somebody with a well-fitted prosthetic limb and said, "But, you know, your basic physical capacities are just those of you without the limb," we would in a way be doing them a certain kind of injustice. And I think we'd be doing ourselves a certain kind of injustice if we treated people with, say, mild dementia who rely very much on a smartphone or on their home environment in just that kind of way. We would be regarding them as less, I think, than they really are. So for me the non-moral route, the functional-similarity route, is a kind of stalemate, because it kind of is carving nature at the joints, and it kind of isn't.

0:13:55.9 AC: And then it's when you chuck the moral or ethical considerations into the pot, that I think you have a strong argument that overall, the best way to go is to err on the side of caution and buy into the picture of the extended mind.

0:14:10.0 SC: I bet that depends on one's definition of caution, but... [laughter]

0:14:15.8 AC: I think you're right.

0:14:16.3 SC: There's probably an intermediate stance in which your whole physical body counts as part of what goes into cognition and the mind, but things outside the body don't. Is that a well-populated space in thinking about this?

0:14:35.2 AC: Yes, I think that is reasonably well populated. Personally, I think it's an unstable space, so I think you'd better go one way or the other. As soon as you start thinking, okay, if I'm using my fingers to help me count, then my fingers somehow count as part of the machinery of mind (and a lot of people do feel that; I think that's true), then you can't simultaneously think that the constantly carried pocket calculator can't count as part of the machinery of your mind. So yes, for me the body is often a very useful stepping stone, because I can suck people in by getting them to agree that continuous reciprocal interactions between brain and body do a lot of what really looks like cognitive work. There are good examples of that, even things like spontaneous gesture as we speak: Susan Goldin-Meadow and colleagues have shown that gesture eases a certain sort of problem solving. If children are asked to explain how they solved a maths problem but aren't allowed to gesture, they're actually a lot worse at it.

0:15:44.7 AC: So there's something going on in that continuous reciprocal interaction that is probably allowing us to offload aspects of working memory into these gestures, so they stick out there in the world, which is good news for philosophers who worry about hand waving. This is part of my thinking.

[laughter]

0:16:04.9 SC: Respectable cognitive tasks going on right there. Can we ask how this was different back in the day? You know, obviously a lot of the things we've been talking about are technological or modern. So was the mind of a human being 100,000 years ago very different?

0:16:24.5 AC: I would say it was, despite the fact that the brain of a human being 100,000 years ago wasn't very different. So yes, the mind of a human 100,000 years ago was very different, but the brain obviously wasn't. But before we go back that far, think of intermediate technologies, things like just using pen and paper. In particular, I'd like to think about the example of scribbling while you think, something that many of us, at least those old enough to have been brought up with pen and paper, do an awful lot of. As we try to think a problem through, we write things down. I think it was Richard Feynman who had a rather famous exchange with a historian, Charles Weiner I think it was, where Weiner said, "So this is the record of your thinking." And Feynman said something like, "No, it's not a record. It's..." What did he say it was? It's not a record, it's working.

0:17:28.9 AC: You have to work on paper and this is the working. And I think there he had an intuition that this isn't just offloading stuff onto a different medium. This is actually part of a process that solves the problem.

0:17:42.8 SC: Do we see this then in other animals? I mean, I presume the answer is yes. But is it more or less dramatic depending on the species?

0:17:51.6 AC: Yeah, I mean, I think we humans do this to a really ridiculous degree, and it's because we invented symbolic culture somewhere along the way. We came to create these arbitrary systems of recombinable elements that we could then re-encounter as objects for perception. Creating these structured encodings of, in some sense, our thoughts and ideas and putting them out in the world is a huge opportunity for any creature that is a good information forager, any creature that is very good at reaching out into the world to resolve some of its current uncertainty. Once again, that's a theme we'll come back to later on. So humans do it a lot more, but other animals do it too. I don't think it's a purely human thing; it didn't just suddenly arise. You know, if you have a chimpanzee, or an orangutan is a better example, that constantly uses a stick to gauge the depth of rivers before going into them, I think there's enough weaving-in and robustness for that to count as part of an extended cognitive loop.

0:19:02.0 SC: I'm very interested in phase transitions, small changes in things that allow enormously different capacities, et cetera. Clearly the ability to think and communicate symbolically was a big one; it opened up a lot. But I guess what you're saying is that one of the things it opened up is not just that we can think more abstractly or generally, but that we can think in literally different ways, because these symbols allow us to more easily offload some of our cognition.

0:19:30.8 AC: Yes, I think that's right. One thing we can do is use one of our biological capacities in very, very different ways, and that capacity is attention. When we put something out into the world in that sort of way, we clothe it in materiality that lets us attend to it in a whole bunch of different ways. Interestingly, I think by doing that we can often break the grip of our own over-constrictive internal models of what kind of thing it is, how it might behave and how we might re-engineer it. Think about something like designing a new train engine: you build a big scale model and then you start looking at it and poking and prodding it, and you can come up with all kinds of ideas that you wouldn't come up with just by sitting down and trying to imagine it all in your head. So I think we are unusual in that respect, and it's a great power of our being perception-action machines that we can really make the most of this. If you look at current, more disembodied AI, it has really no use for this kind of strategy. The way it works is just not such that it's going to start putting stuff out into the world and then poking and prodding it in order to think better; it might do that for all kinds of other reasons.

0:20:50.4 SC: But is that their fault or ours? I mean, we haven't really given them the capacity to do that.

0:20:54.9 AC: I think that's right. I think it's our fault...

0:20:56.0 SC: Yeah.

0:20:58.0 AC: I think we've... To be honest, I think, well, it's our fault. We've designed a class of tools that are really, really useful for what they're useful for, but I don't think those tools will ever achieve what I would call true understanding, because they don't have their grip on reality grounded in perception-action loops. And it's that grounding in perception-action loops that I think we're leveraging with material symbolic culture: when we create these things and poke and prod them in different ways, we're exploiting the fact that we're super specialized for perception-action loops. So there's a trick there that disembodied AI is missing out on, but then it gets all kinds of other things instead.

0:21:38.4 SC: Sure. Sure, it does, but... Okay, I'm just gonna download a little bit, 'cause what you just said sparked several ideas, and it's prefiguring things we'll get to later. Two things. One, an idea I heard more than 10 years ago that AI systems suddenly work much better when you put them in a robot and they can go out there and interact with the world. And secondly, a podcast I did with Judea Pearl, who claims that babies spend all of their time making a causal map of the world by poking at things and seeing what happens. And I know that the prediction models we'll be talking about are kind of like this, the brain making a model of the world. So, putting all that together, do we imagine that if we put modern large language models in an embodied context and let them go out and poke the world, they would dramatically change how they think?

0:22:32.2 AC: Yes, I think they would. There are companies out there trying to do this, VERSES AI is one of them, and the idea certainly is to see what happens if you leverage the active inference framework as a way of creating new ecosystems of human and artificial intelligences. Although I wouldn't start, I think, with a large language model exactly, just because something like that has been trained to predict basically the next word in corpora of text, and that's a very funny place to start if what you want is a perception-action machine. It seems much more like you'd want developmental trajectories where you start without that huge body of, nonetheless maybe relatively shallow, successor-item information. You want to learn basics about causation and flow, and you probably want to understand those things in a way that is more grounded than it would be if you just knew how all the words about causation and flow tend to follow one another.

0:23:37.5 SC: It sounds like you...

0:23:38.6 AC: Of course, that's an empirical question; it remains to be seen...

0:23:42.1 SC: Of course.

0:23:42.2 AC: It might be that if you bung a large language model in a good enough robot, it's just like giving a baby a super head start or something. Yeah, it'd be nice.

0:23:48.8 SC: But it does sound like you might be at least sympathetic to the idea that we need... I've had some people on the podcast argue that AI would be better off if we didn't just dump large data sets into deep learning networks, but also poked and prodded the AI into having a symbolic representation of the world.

0:24:09.7 AC: Yes, I think that's right; I do agree with that. I think if our goal is not good tools to think alongside, but rather something like artificial general intelligence, so colleagues, if you wanna build an artificial colleague, then I think large language models are not the right place to start. But if you want a really lovely tool that can do an awful lot of work and that you can work with, then they're really interesting.

0:24:38.7 SC: Honestly, I don't want an artificial colleague, but an artificial graduate student or postdoc would be very, very helpful, or someone who could answer my email. I guess it would be weird not to ask the obvious question. I get annoyed when my philosophy colleagues sometimes seem to claim an immediate and obviously true view of what is natural and real in the world, but maybe I'm gonna do that right now by saying: I feel like I'm inside my body. I feel like the I, myself, is located... maybe even in my head. So is that just old intuitions that haven't quite caught up to the modern world?

0:25:20.0 AC: No, I think that's a valid intuition, if you like, because I think we infer that we are wherever the perception-action loop is closing. We're basically perceiving the world, launching interventions and receiving the effects of those interventions. That's such a fundamental part of how we learn about things that I think it's no surprise that's where we think we are. Notice that that's where we'd think we were even if part of our brain were located on a nearby cliff top, communicating wirelessly with the rest of the brain; we'd still think, oh, this is where the perception-action loop is closing, this is where I am. Dan Dennett has a beautiful old philosophical short story called "Where Am I?" where he plays with that idea. There's someone whose brain is on the cliff top, and they think they're closing perception-action loops using a body in the world, but then at some point the link gets cut off, and suddenly they think, oh, now I'm claustrophobically stuck on the cliff top, because they knew their brain was there. Anyway, it's a beautiful story.

0:26:28.8 SC: No, that is very interesting. Okay, so we might as well dig into this phrase that you're using over and over again, the perception-action loop, and I think the word loop is crucially important there, right? The way I like to think about it, and perhaps this is because of technology, is that we have a naive metaphor for how the brain works as kind of like a video camera with a computer hooked up to it, right?

0:26:55.6 AC: Yeah.

0:26:57.1 SC: It's just taking in our sense data, processing it and doing something. And a big part of your message is, no, it's really not like that at all.

0:27:05.0 AC: Yeah, no, that's exactly right. I mean, let's think about one way in which it's not like that. Think about something like running to catch a fly ball in baseball, where you're running to try and catch this ball that's flying out there. The way to do that isn't to take in all the information about the flight so far, then plot where you think the ball is going to go, and then tell the robot, you as it were, to go over there. Instead, what you do is run in a way that keeps the apparent trajectory of the ball in the sky looking motionless as you look up there. It turns out that if you just keep doing that, you'll be in the right place when the ball comes down. You need to do a bit more to actually catch it, but anyway, you'll be in the right place. That's a way of solving an embodied action problem that involves keeping the perceptual signal within certain bounds and acting so as to do that, basically something that, again, prediction-error-minimizing systems would actually be rather good at.

0:28:11.0 AC: So with perception-action loops, I think the important thing to notice is that they're generally not solving problems all in one go. It's more like: I do a little bit of this, I do something that gets more information while keeping the perceptual stuff within bounds, and then I repeat the process again and again until the thing is done.
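The fly-ball example can be sketched as a perception-action loop. A common formalization in the perception literature is optical acceleration cancellation: the fielder moves so that the tangent of the ball's elevation angle keeps rising at a steady rate, that is, with zero optical acceleration. The minimal Python sketch below assumes a drag-free ball, a fielder standing in line with the ball's path, a fixed running speed, and (as a simplification) reads the optical acceleration as if the fielder were standing still at their current spot. All the numbers and function names are illustrative, not taken from the book.

```python
G = 9.8  # gravitational acceleration, m/s^2


def ball_position(t: float, vx: float, vy: float) -> tuple[float, float]:
    """Drag-free projectile launched from the origin: (horizontal, vertical)."""
    return vx * t, vy * t - 0.5 * G * t * t


def tan_elevation(t: float, fielder_x: float, vx: float, vy: float) -> float:
    """Tangent of the gaze elevation angle from a fielder standing at fielder_x."""
    bx, by = ball_position(t, vx, vy)
    return by / (fielder_x - bx)


def optical_acceleration(t: float, fielder_x: float, vx: float, vy: float,
                         dt: float) -> float:
    """Backward second difference of tan(elevation) over the last three samples."""
    f0 = tan_elevation(t, fielder_x, vx, vy)
    f1 = tan_elevation(t - dt, fielder_x, vx, vy)
    f2 = tan_elevation(t - 2 * dt, fielder_x, vx, vy)
    return (f0 - 2 * f1 + f2) / (dt * dt)


def run_to_catch(start_x: float, vx: float, vy: float, speed: float = 7.0,
                 dt: float = 0.01, catch_height: float = 1.0) -> float:
    """Step back or forward each tick so as to cancel optical acceleration."""
    x, t = start_x, 2 * dt
    while True:
        _, by = ball_position(t, vx, vy)
        descending = vy - G * t < 0
        if descending and by <= catch_height:
            return x  # ball is at catching height: report where the fielder is
        acc = optical_acceleration(t, x, vx, vy, dt)
        if acc > 0:    # image speeding up: ball would sail overhead, so back up
            x += speed * dt
        elif acc < 0:  # image slowing down: ball would drop short, so run in
            x -= speed * dt
        t += dt


if __name__ == "__main__":
    landing = 2 * 15.0 * 20.0 / G  # where the ball comes down (never used by the fielder)
    print(run_to_catch(50.0, 15.0, 20.0), landing)
```

The design choice worth noticing is that `run_to_catch` never computes where the ball will land: it only nudges the fielder until the optical signal stops accelerating, over and over, which is the "do a little bit, then repeat" pattern described above. For a drag-free ball, a fielder standing exactly at the landing spot sees tan(elevation) grow linearly in time, so the signal is zero there, positive short of it and negative beyond it.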

0:28:32.0 SC: So I do appreciate your use of a USA-based sports metaphor there, [chuckle] but we have an international audience that's hopefully...

0:28:39.0 AC: Obviously I have no idea what it really means but I've read this.

0:28:44.4 SC: [laughter] But okay, so the motto then is: the brain is a prediction machine. And I just wanna home in on the difference between that view and another view you might... I think people who don't think about the brain too much would say, okay, sure, yeah, my brain makes predictions. But you really wanna put that front and center as the point of what the brain does.

0:29:06.8 AC: Yeah, yep. I mean, it's both the beauty and the burden, if you like, of this story (I'm told not to use the word story 'cause it doesn't sound scientific, so I'll say account) that it's basically saying this is a canonical operation of the brain. The canonical computation that the brain performs, and that it strings together in different ways to do motor control and interoception and exteroception, is basically one in which the brain is attempting to predict the current flow of sensory information. Of course there are different time scales at which you can predict it, and the basic time scale, the [0:29:49.1] ____, is predicting the present, which is of course a rather funny use of prediction; you think prediction is about the future somehow. But this is a sense in which the brain is a guessing machine: it's trying to guess what the current sensory evidence is most likely to be, and then crunching that guess, based on past experience, together with the current sensory evidence. That turns out to be a very, very powerful way of estimating the true state of the external world. Of course it goes wrong sometimes, but it's a very powerful strategy; it allows you to use what you've learnt in the past to make better sense of what the raw sensory energies are suggesting in the present.
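One standard way to make "crunching that guess together with the current sensory evidence" concrete is Gaussian (Bayesian) cue combination. In this minimal Python sketch, which assumes both the prior guess and the sensory evidence are Gaussian with known variances, the updated estimate is the old guess plus the prediction error, scaled by how reliable (precise) the senses are relative to the guess. The function name and the numbers are illustrative, not taken from the book.

```python
def update_guess(prior_mean: float, prior_var: float,
                 observation: float, obs_var: float) -> tuple[float, float]:
    """Combine a Gaussian prior guess with a Gaussian sensory reading.

    Returns the posterior mean and variance. Precision = 1 / variance.
    """
    prior_precision = 1.0 / prior_var
    obs_precision = 1.0 / obs_var
    post_var = 1.0 / (prior_precision + obs_precision)
    prediction_error = observation - prior_mean
    # Weight on the error = share of the total precision contributed by the senses.
    gain = obs_precision * post_var
    return prior_mean + gain * prediction_error, post_var


if __name__ == "__main__":
    # Same surprising reading (10.0) against the same prior guess (0.0):
    print(update_guess(0.0, 1.0, 10.0, 9.0))   # noisy senses: the guess barely moves
    print(update_guess(0.0, 1.0, 10.0, 0.1))   # sharp senses: the evidence dominates
```

The same arithmetic shows one way it can go wrong: a confident but mistaken prior (a small `prior_var`) pulls the estimate away from what the senses are actually reporting.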

0:30:34.8 SC: This certainly seems very related to Karl Friston's ideas: the free energy principle, the Bayesian brain. We had him on the podcast. What is the relationship between all these ideas?

0:30:46.9 AC: Yeah, I mean, basically I'm just being an apologist for Karl Friston here. Karl's basic picture, the free energy minimization picture, is a sort of high-road version of the low-road version that I give you. The low-road version comes out of thinking about perception and thinking about action, whereas the high-road version comes out of thinking about what it takes to be a persistent living system at all: you have to preferentially inhabit the kinds of states that define you as the system that you are. That means you end up in the states that, in some broad sense, you predict you ought to be in. So in that broad sense, the fish kind of predicts it ought to be in water, because its actions are all designed with that in mind. The low road is a bit less challenging; I guess that's really why I take it. The low road just says that we have good evidence that the canonical computations that human brains, and the brains of many other animals, perform involve this attempt to guess the sensory signal, and it turns out that you can use that to do motor control in a very efficient way.

0:32:08.2 AC: It's been known for a long time, I think, that it's important in perception, and the other thing that I think Karl Friston's work has really done is show that that old story about perception becomes so much more powerful when you see that it's got a direct echo in an account of action. So the idea there is that perception is about getting rid of prediction errors as you try to guess the state of the world using everything you already know, and action is getting rid of prediction errors too, but it's getting rid of them by changing the world to fit the prediction. So you have these two ways to get rid of prediction error: you find a better prediction, or you change the world to fit the prediction you've got. One is perception, the other is action.

0:32:55.5 AC: But they are performed using the same kind of basic neuronal operations, obviously there are differences between motor control and sensory perception, but if you look at the wiring of motor cortex, it turns out that it's actually wired pretty much like ordinary sensory cortex, in fact, so much so that many people don't like to talk about sensory and motor cortex just for that reason, so these stories or these accounts give you a sort of principled way of understanding that in each case, you're predicting a sensory flow, but in one case, you're trying to retrieve an old model of the world to fit the flow, and that's perception. And in the other case, you're trying to change what you're getting to fit the prediction, we can circle around to that, I think I can say that a bit better in a moment.

0:33:47.1 SC: Is the idea that it's the same neural circuitry that is doing sensing and control or is it that the two circuitries look parallel?

0:34:00.1 AC: Yes, it's that they look very much parallel.

0:34:04.2 SC: Okay.

0:34:04.3 AC: That they operate in the same sort of way. The reason they're not the same is that proprioceptive prediction plays a very special role in motor control. Proprioception is the sort of system of internal sensing that lets us know how our body is currently arranged in space, so the idea is that, in order to reach to pick up the Coke can that's in front of me, I predict the proprioceptive flow that I would get if I were reaching for it, and then I slowly get rid of those errors by moving the motor plant in just that sort of way. So you get rid of the errors by moving the arm, in this case, to reach out for the Coke can. So proprioceptive predictions are specially placed here: they act as motor commands, and the other kinds of predictions don't.

0:35:00.2 SC: So it's... I hesitate to use the word think here because thinking and cognition and thought all have technical meanings that are slippery to me, but the idea is that your brain sort of thinks intentionally or unintentionally that your arm is somewhere slightly different than it is, and then the muscles move it to make that more accurate?

0:35:23.7 AC: That is one way of putting it, and people have put it that way. So some people have said, look, you've gotta kind of lie to yourself here, the brain's sort of got to lie to itself: it's got to ignore all that good information that says that my arm isn't moving, it's got to predict the trajectory of sensation that you would get if it was moving, and that prediction is given so much weight that the other information is overwhelmed and off it goes, as it were; you get rid of the errors by moving it. I'm not sure personally that's exactly the best way to put it. I kind of think it's really about attention. So I think it's like you've got information that says that you're not moving the arm, but you're also, because of your current goals, predicting this proprioceptive flow that would correspond to reaching for the Coke can, and by, as it were, disattending to the information that says that it's stationary, you allow the other prediction to do its work. So I think it's sort of targeted disattention rather than actually lying to yourself. I'm not quite sure, but I prefer that. I think we're probably saying the same thing, you know, the math and the models all work out just the same.
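The "targeted disattention" reading of action can be caricatured in a few lines of Python. This is a hypothetical toy, not a model from the book: the function `reach`, the precision values, and the update rule (a fixed step size `rate`, with each step's proprioceptive error split between revising the prediction and moving the arm in proportion to precision) are all assumptions chosen for illustration.

```python
def reach(target, sensory_precision, prior_precision, steps=50, rate=0.2):
    """Toy reach driven by a proprioceptive prediction error.

    The error between where the arm is predicted to be (initially the
    target) and where it is sensed to be can be reduced two ways:
    revise the prediction (perception) or move the arm (action).
    The precision weights decide how the error is split between them.
    """
    arm = 0.0             # sensed arm position; the arm starts at rest
    prediction = target   # goal-driven proprioceptive prediction
    # Share of the error assigned to the "arm is stationary" evidence.
    w_sense = sensory_precision / (sensory_precision + prior_precision)
    for _ in range(steps):
        error = prediction - arm
        prediction -= rate * w_sense * error      # perceptual route
        arm += rate * (1.0 - w_sense) * error     # motor route
    return arm


# Attending closely to the evidence that the arm is still: little movement.
print(round(reach(10.0, sensory_precision=9.0, prior_precision=1.0), 2))
# Disattending to that evidence: the prediction wins and the arm moves.
print(round(reach(10.0, sensory_precision=1.0, prior_precision=9.0), 2))
```

In this toy the error shrinks by the same factor each step whatever the weights; the precisions only decide where it goes. The arm ends up at roughly 10% of the target in the first case and 90% in the second, so turning down the weight on the "stationary" evidence is what lets the prediction act as a motor command.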

0:36:36.7 SC: Alright, okay, good.

0:36:37.0 AC: But I think if we call it targeted disattention we understand it a bit better. I think we... It makes more contact with sports science, for example, where you don't really want to be attending to the position your body is currently in. You want to attend to something like how it ought to be; you want to entrain yourself by knowing what it would feel like if you were doing it right, right now.

0:37:03.0 SC: So is it similar to the idea of file compression? You know, if you have a JPEG or an MPEG, there are some patterns that are repeated, so you don't have to keep track of every pixel, you can just assume that they keep going, and then you pay attention to the differences, and those are what we talk about as errors in the brain case?

0:37:26.0 AC: Yeah, that's certainly correct when we come back to thinking about perception, and something like that is involved in action too, but it does seem like it's easier to think about that bit in the case of perception, where the idea would be that I predict a current sensory flow and it's only the errors in that prediction that then need further processing. So, if I think I've woken up in my bedroom and I predict a certain sensory flow, and as I turn my head around, I'm not getting any information that corresponds to that, I might have to go scrabbling, or use these prediction errors, to retrieve the information that actually I'm in a hotel room and it's gonna look pretty different. So I think it's in that sense that, when you don't have to scrabble all that hard, this is a super, super-efficient way of doing moment-by-moment processing. Because if you've already predicted it properly using what you know about the world, you don't need to take any further action.

0:38:28.6 AC: So in that sense, it's exactly like JPEGs and motion-compressed video, where the idea is, if I've already transmitted the information about this frame of the video, then in order to know what the next frame is, all I need to do is react to whatever the errors would be if I assumed it was just like the frame before. And normally there are then a few residual errors, and if you use those as the information that you need to deal with, you get the right picture. So these prediction errors, these residual errors, carry whatever sensory information is currently unexplained. So you explain whatever you can with prediction, and then you don't need that much bandwidth to use the rest to refine it.
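The video analogy can be sketched in Python as a toy "predict the previous frame" codec. It is a deliberate simplification: real video codecs use motion-compensated prediction and transform-coded residuals, whereas this sketch, with hypothetical `encode` and `decode` functions and frames represented as flat lists of pixel values, simply stores the pixels where the prediction turned out to be wrong.

```python
def encode(frames):
    """Send the first frame whole, then only the corrections for each later frame."""
    encoded = [list(frames[0])]
    for prev, cur in zip(frames, frames[1:]):
        # Prediction: "this frame looks like the last one".
        # Keep only the pixels where that prediction was wrong, with their new values.
        corrections = {i: v for i, (p, v) in enumerate(zip(prev, cur)) if v != p}
        encoded.append(corrections)
    return encoded


def decode(encoded):
    """Rebuild every frame by replaying the previous one and applying corrections."""
    frames = [list(encoded[0])]
    for corrections in encoded[1:]:
        frame = list(frames[-1])
        for i, v in corrections.items():
            frame[i] = v
        frames.append(frame)
    return frames


frames = [[0, 0, 0, 0], [0, 0, 5, 0], [0, 0, 5, 0]]
packed = encode(frames)
print(packed)
assert decode(packed) == frames
```

The encoded stream is `[[0, 0, 0, 0], {2: 5}, {}]`: the unchanged third frame costs nothing to transmit, which mirrors the point that well-predicted input needs no further processing.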

0:39:13.0 SC: I remember hearing, it might have been in a conversation with David Eagleman here on the podcast, the idea that the reason why the years seem to go by faster as we get older is because there's less novelty in our lives. The first time we go to a beach, it's all new and we really expend a lot of mental energy taking it in, whereas the 20th time we already have a background and the errors are not that big. Does that fit into this story? It sounds like it does.

0:39:40.0 AC: Yeah, I think that fits really, really well. It also helps explain why it's so hard to learn new things at a certain point in your life because the inputs that you are receiving get sucked into these well-worn troughs in your current world model, if you like. I think us academics find that a lot as we get older.

[laughter]

0:40:00.7 AC: It's something like, "Oh yeah, I understand that. Yeah, that fits with this story that I've already got." Sometimes it's very, very hard to give the new evidence its proper due. And of course attention is a tool for doing that, but that takes a lot of deliberate effort sometimes, to really, really attend. Attention reverses the dampening effect that prediction normally has. So part of the evidence base for this story, this account, is that well-predicted sensory inputs cause less neuronal activity than other sensory inputs. So there's something odd there. These are the ones we deal with very fluently, and yet there's less going on in the brain when you deal with them than in other cases. So that's one of the signatures that led, I think, to predictive coding coming on the scene.

0:40:57.9 SC: You open a can of worms when you say how the old academics get stuck in a rut there, so I have to just follow up a little bit. I think there's two different perspectives I could put forward. One is more or less what you just said, as we get into our ruts, it takes more and more energy, activation energy or whatever to think in new ways. But the other is... Maybe this is more optimistic, because we are older and good at certain ways of thinking, we forget that it was hard to not be good at any ways of thinking. And we forget how hard it was to learn Calculus or French or whatever, and therefore we're just not willing to put in the work anymore, even if it were exactly the same amount of work.

0:41:40.8 AC: Yes, I think that's right. We end up in a situation of expert perceivers in general, where expert perception clearly is being able to take this unruly sensory information and just see what it actually means for victory on the chess board or whatever you happen to be engaged in. So I think in a way, all successful perception is expert perception in that sense. And academic expertise is in the same sort of ballpark. And it has the same sort of blind spots as well. So, if there is something interesting going on but it doesn't fall within the bounds of the existing generative model, to use the right vocabulary for the predictive processing story, then the only thing you can do with it is use it to drive tortuous, slow new learning, and you can see why a lot of the time we don't really wanna do that.

0:42:38.3 SC: [laughter] Don't wanna do that. No. Well look, relatedly, you used the word efficiency as one of the benefits to this model. Probably the first ever video storage software was more or less like a movie, where you just had every frame and you stored it, right? And then only later did you realize it was much more efficient to just update the changes. Presumably, since our brains were not intelligently designed and grew up through evolution, this is a nice feature to have. We're trying to do the most with finite resources. Is that a big part of the attraction of this story, that it requires less thinking or energy or calories or what have you?

0:43:20.1 AC: Yes, I think that's right. I think if the brain didn't work this way, but we were able to do the kinds of things that we do, we'd probably have brains that overheated repeatedly. The amount of energy, there is a trade off here. The trade off is keeping the world model, the generative model that you are using to make the predictions going. And that of course is a big metabolic cost. That's the cost that is being... Whose signature you see in spontaneous cortical activity. It's this stuff going on all the time, all the time, think about the resting-state work from [0:43:58.3] ____ and others as well. So that is a cost, but that is traded against the moment by moment cost of processing all this incoming information.

0:44:07.8 AC: So I think what we're doing is we've traded the cost across time there and we're using a fairly metabolically expensive upkeep of a world model to allow a much more efficient moment by moment response, a faster one as well. So, being somewhat anticipatory about what's going on in the world around you is a pretty good thing if you're an animal involved in all kinds of conflicts and dangerous situations. And also there are wiring costs. It's hard to know how best to think about the wiring cost, but the amount of downward flowing wiring and information seems to very often outnumber the inward flowing stuff by sometimes up to 10 to one. Certainly four to one.

0:45:00.4 SC: Okay.

0:45:01.5 AC: So it looks as if brains have decided, or it's been decided over evolutionary time, that this is a good thing to do. And I think that that means it is overall the most efficient way to proceed. I also worry that in a way, if you want understanding, there might be no other way to proceed. And maybe there's something more abstract or philosophical going on there, but I don't know what understanding would look like if, as it were, you weren't bringing a world model of some kind to bear on the current sensory evidence in a context-sensitive way. So there's a question there that I really don't know the answer to, because I know there are formal proofs that anything that can be done using a system that has feedback like that can be unfolded into a feed-forward system.

0:45:56.2 AC: Maybe the upshot there is, well, maybe you could unfold us into a feed-forward system for a few bits of processing or something, but it would require a huge brain and ridiculous amount of energy. But somebody out there might want to think about that.

0:46:12.1 SC: Okay, good. Yeah, exactly. I was gonna say, this does sound like a research project for somebody, but there's the benefit of this mechanism that the brain uses, the predictive processing model. Presumably there's also downsides, maybe one is that we're very susceptible to illusions, right? We think we're seeing things that aren't there because it's part of what our brain is predicting very, very strongly.

0:46:37.4 AC: Yes, absolutely. I'm subject to several of these illusions. I'm sure we all are. Phantom phone vibration is probably a good one. You very often might think that your phone's going off in your pocket when it's not even in your pocket. I'm now susceptible to phantom wrist vibration since I gave in and bought a smartwatch. The hollow mask illusion, I guess, is a classic in this area: if an ordinary joke-shop mask is viewed from the concave side, so viewed from behind in a certain sense, with a light source behind the mask, you'll think that it's an ordinary outward-facing face that you're seeing. Again, that seems to be because we have very strong predictions about the convexity of normal face structures; we very seldom see anything that isn't like that. And that prediction now trumps the real sensory evidence specifying concavity, except it doesn't in everyone. And so autism spectrum condition folk are slightly less susceptible to that illusion and to the McGurk illusion, for example.

0:47:49.2 SC: What is that?

0:47:49.2 AC: From a predictive processing viewpoint, that's probably because of a slightly altered balance, one where sensory inputs are somewhat enhanced relative to expectations.

0:48:00.8 SC: What was the other illusion you mentioned? The McGurk...

0:48:02.4 AC: Oh, the McGurk. This is a sort of, it's like a ventriloquism illusion. Yeah. Sorry. It's this one where it's a sort of "ba"/"ga" illusion. There is a sound which can be played such that if the lips are moving a certain way, you hear it as "ga," and if they're moving in a different way, you'll hear it as "ba," but it's exactly the same sound.

0:48:26.1 SC: Exactly the same sound.

0:48:26.2 AC: So it's not entirely clear whether that's an illusion or something else. It's hard to know exactly where the boundaries of illusions and other kinds of inference lie.

0:48:39.0 SC: These optical and auditory illusions almost seem fun and benign. But presumably we can take the basic picture that there's an enormous amount of information coming into our senses at every moment and we can't possibly process it all. Therefore we filter it. We filter it to fit our perceptions and then correct for the errors, that must also work with abstract concepts or news items just as much as pictures that we get through our eyes.

0:49:12.4 AC: Yes, I think that's right. So there is a tendency not just to see what we expect to see, which can be very damaging too, but sometimes to read what we expect to read. Well, by read there, I mean read into a text what you expect to be learning from that text. I think many of us do this, in fact all of us probably do this, when we read news articles where it looks like they're saying something that we're very much either sympathetic to or opposed to, but maybe when you look at that text again, or you go over it with a fine-tooth comb, or you look at it with someone that's got a different perspective, you realize that's not really in that text. It's just what I brought to bear in some way.

0:49:57.5 AC: In the visual case, Lisa Feldman Barrett has this lovely but very, very scary example of the way that interoceptive predictions, predictions about your own bodily state, are getting perhaps crunched together with exteroceptive, ordinary sensory information in ways that could perhaps give you experiences where a weapon or... Sorry, a weapon, where an object that is reached for in a dark alley might actually appear to you as a gun when really it's just a smartphone. In much less worrying and more controllable cases, you can show that if you give people false cardiac feedback, so you make them think their heart's beating faster than it is, then a face that would otherwise look neutral to them is judged to be an angry face or a worrying face somehow. So it does seem as if we are using internal information to help make the predictions that structure our experience of the external world. And so that's something that can go right or go wrong as well.

0:51:18.1 SC: So we end up seeing what we expect to see and maybe even believing what we expect to believe in some sense.

0:51:26.5 AC: Yes, there is a sort of... Some people say seeing is believing, but in these cases, believing is seeing, and maybe in these interoception cases feeling is seeing as well. That's the way that Feldman Barrett puts it.

0:51:41.1 SC: Another former Mindscape Podcast guest, I gotta say, Lisa Feldman Barrett. Are there therefore features or things out there in the world that we are systematically bad at perceiving because of this way that we process information?

0:52:00.4 AC: That's a lovely question and one that I haven't thought about. So things that all of us just tend to miss because... well, I suppose the most obvious answer there is unusual events. So, you would've seen the footage of the gorilla walking across the scene where you are trying to count the passes of the basketball. This is work by Simons and Chabris. Anyway, it's classic, it's classic work on sort of attentional blindness and... Sorry, inattentional blindness. So the idea there is if you're concentrated on one task, something really quite dramatic could happen and you just wouldn't notice it. And I think we're all very, very subject to that.

0:52:48.9 AC: So outliers, I suppose that's the thing. Predictive brains are tuned in to patterns that have helped them solve problems before. And so whenever we are confronted with an outlier situation, we're likely to miss it unless for some reason we're attending right there. But why would we be, because attention is driven by where we expect the good information to be. And this is why, for example, expert drivers are very, very good at a lot of things, but they can miss a cyclist if the cyclist is approaching a roundabout from entirely the wrong direction, somewhere that, you know, no one comes from at that roundabout. So there are a lot of these looked-but-didn't-see accidents, as they call them, where people might even move their heads in that direction. But if it's that unexpected, they just don't see what's going on.

0:53:43.6 SC: Presumably these are all quantitative questions. If something is blatant enough, we're gonna see it even if we didn't predict it. Is that fair to say?

0:53:52.2 AC: I think yes, that's fair to say, as long as we are able to attend to it. So it needs to be able to... And some things will try and grab our attention. So like a loud noise: if a really loud noise, like those scaffolders I had out my window earlier, happens, then that will grab my attention, unless I'm really, really desperately focusing all my attention somewhere else, as I might be. So if you think about stage magic, stage magic is a really nice case where rather dramatic things can suddenly appear on stage and most people don't notice 'em, because attention has been so very, very carefully controlled by the magician, 'cause attention is upping the weighting on either specific predictions or prediction errors. So it's a really, really super important part of the predictive brain story, this balancing act that is varying moment by moment.

0:55:00.5 SC: That's a great example. I like the idea that professional illusionists are just leveraging the fact that our brains are predictive processors. [chuckle]

0:55:07.6 AC: That's true. Yeah. No, there's a beautiful book, I can't remember the title now, but it's by Louis Martinez and it is a book written by neuroscientists about stage magic leveraging the picture of the predictive brain as a way of understanding a lot of it.

0:55:21.7 SC: Wow.

0:55:22.4 AC: So yeah.

0:55:23.5 SC: Very, very good. Okay. So let me then put on the skeptical hat just a little bit. And this is similar to questions that are raised for Karl Friston, et cetera. Look, if our brain is trying in some sense to minimize prediction error, can't it do that best by not collecting any data, [laughter] just by hiding away and being completely unsurprised 'cause we never leave our room?

0:55:49.0 AC: Yeah, I think that's such a nice worry to have because it leads right into this hugely important dimension of interoceptive prediction and artificial curiosity. So let's maybe talk about these things for a moment.

0:56:01.8 SC: Please.

0:56:01.9 AC: So there is this sort of worry that is sometimes called the darkened room worry, that the best place for a predictive brain to be would be to lead us into a dark corner and just keep us there. So all of those sensory inputs, they just keep on coming, just the same. You predict them perfectly, but you wither and die. Now, we don't do that, obviously. Does that mean the predictive brain story is wrong? Obviously, I don't think so. I think what it means is that there's more to the predictive brain story than just bringing exteroceptive sensory information into line with predictions. We're making all these interoceptive predictions all the time. I'm predicting the state of my own body; I'm predicting, for example, that I should at all times have sufficient supplies of water and glucose, and then action is automatically taken, not when I don't have enough water or glucose, but long before I don't have enough water or glucose. So if you start to feel thirsty, that will be happening before you've reached the point at which you're gonna wither and die without water. And if you take a drink when you're thirsty, you'll feel relief, but that water will have no effect on you physiologically for about 20 minutes. So the relief is as much a prediction as the original thirst was. Again, it's an example from Lisa Feldman Barrett.

0:57:32.9 AC: So once you put interoception into the picture in that way, then, of course, we're not gonna stay and not eat and not drink in a darkened room, because we have these kind of chronic systemic expectations, if you like, of staying alive. But then there's a bit more to it than that, I think, as well, 'cause we don't stay in boring rooms either. So you could put me in a very boring room, but give me enough food and water and all of that stuff, and I wouldn't like that very much either. I might even start to do things; I might start to play games by drawing on the wall or something like this. And so this now falls under the umbrella of work on artificial curiosity. And predictive brains are naturally curious brains, because minimizing prediction error is a basic reward. So you could sort of say, for brains like that, the only thing that's really rewarded is minimizing prediction error. That's what they want to do. And if they're not performing any particular task, they'll still try and find some prediction error to minimize, 'cause that's the kind of thing that they are, and this is what makes predictive brains general-purpose structure learners.

0:58:50.2 AC: So there's some rather nice work that's been done by Rosalyn Moran at UCL. I think it's UCL. And what she's been doing is comparing reward-driven learners with prediction-driven learners and finding that the purely reward-driven learners will learn a way of solving a problem more rapidly than the prediction-driven learners very often, but frequently a more shallow way of solving the problem because as soon as they find a way to reliably get their reward, they kind of stick. Whereas a prediction-driven system, it basically wants to minimize prediction error as much as possible, and that leads it to explore its environment repeatedly learning more and more about it, and if you then seed it with a goal, and you do both these things simultaneously mostly, it will outperform the pure reward-driven agents. So I think when you put those two things together, you kind of see that the darkened room isn't really much of a threat. We don't like death and we don't like boredom, and both of those things seem to be natural effects of being driven to minimize errors in prediction.

1:00:05.7 SC: I guess the way out that comes to my mind directly, which I'm not sure if it's the same thing as what you are proposing or it's something different, but in physics, when we calculate the entropy of a system and we say it's at maximum entropy, that's telling us some distribution of all the possible states it could be in, blah, blah, blah, but famously, we can calculate maximum entropy of a system subject to different constraints, like subject to it's at a certain temperature or it's at a certain pressure or whatever, and we'll get different forms for that probability distribution. So couldn't we just say that there are two things going on? We want to minimize prediction error, but we also want to survive, so there's a constraint, we want to survive, and under that constraint, it's actually useful to go out and be surprised sometimes so we can update our predictive model, and I think that would... I don't know how mathematically that will work out, but it does seem a little bit intuitive to me.

1:01:08.6 AC: Yeah, and I think that does mathematically work out. I think this is the sort of stuff that Karl Friston can speak to more reliably than I can. But it looks as if very often, the correct move for a prediction-driven system is to temporarily increase its own uncertainty so as to do a better job, over the longer time scale, of minimizing prediction errors, and that looks like the value of surprise, actually. I think we artificially curate environments in which we can surprise ourselves. I think, actually, this is maybe what art and science are, to some extent at least: we're curating environments in which we can harvest the kind of surprises that improve our generative models, our understandings of the world, in ways that enable us to be less surprised about certain things in future.

1:02:04.8 SC: I wonder if you could use this idea or set of ideas to make predictive models for what kind of games people would like to play, or what kind of stories or novels or movies people would like to experience. You want... Or I guess in music, it's very famous, you want some rhythm, some predictability, but you also want some surprise also. There's a sweet spot in the middle.

1:02:27.1 AC: Yes, I think that's exactly right. There's Karin Kukkonen, a literary theorist in Scandinavia somewhere, who's written a nice book called Probability Designs, and she is using the vision of the predictive brain as a way of understanding the shape of literary materials, poems and all that.

1:02:49.4 SC: Okay, there you go.

1:02:49.4 AC: Her idea is that we should think of every novel, every poem, as a probability design, leading us through building up expectations, cashing them out, building them up at multiple levels, giving precision weighting to some of the expectations versus others, and clearly this makes sense of music as well. There's an awful lot of that going on in music, and it probably applies to all sorts of things. Even roller coaster design, I imagine, is exactly that. A roller coaster is a kind of probability design. What's interesting is how we get surprised again and again even if we ride the same roller coaster, or read the same novel, or listen to the same piece of music. And I think that shows the skill of the constructor in giving us inputs that activate bits of our model, driving expectations again and again, to the point where you're still surprised in some sense. Even though you could have said beforehand that's what's gonna happen, you still can't help but be surprised, and this, in some sense, at some level, would be the best thing to say.

1:03:53.0 AC: And I think this speaks a little bit to the idea that as prediction machines, we are multi-level machines. It's not just that there's a prediction and it's either cashed out or it isn't; this prediction exists as a high-level abstraction predicting a lower-level one, predicting a lower-level one, predicting a lower-level one, all the way down to the actual incoming notes of the tune or words on the page. It's because we're multi-level prediction machines, I think, that things like honest placebos work. So placebos, obviously, fall rather nicely under this sort of general account, because expectations of relief thrown into the pot can make a difference to the amount of relief that you feel. But even if you're told that you're being given a placebo, it can still make a difference to the amount of relief that you feel. Presumably that's because there are all these sub-levels of processing that are getting automatically activated by good packaging and delivery by people in white coats with authoritative voices and this little thing. So I think that's something that we might learn from these accounts too, that maybe medicine and society could make more use of ritual and... Yeah, ritual and packaging and things that, in some sense... I don't know. Well, I won't say ineffective; what I mean is they bring about their effects, but not through the standard routes.

1:05:25.0 SC: Good. Okay, very, very good. Okay, we're late in the podcast. We can get a little bit wilder and more profound here. So in the book, you gesture toward ideas along the lines of we are not only... The thing to get in mind is that we're not just sensing reality and writing it down, we are in some sense participating and bringing it about. Maybe even using phrases like what you think of as reality is really a hallucination. Tell us exactly how far we can go along that rhetorical road.

1:06:01.6 AC: Yeah. Proceed with caution would be the right thing to say, because lots of people talking about these things, myself included, use this phrase, perception is controlled hallucination, and you can see why, because the idea here is that perception is very much a constructive process in which our own expectations get thrown into the pot and they help... You see what you see. And those same expectations are thrown into the pot of feeling your body, the way that you feel it. And so a lot of our medical symptoms, in fact, all our medical symptoms, reflect some kind of combination of expectation and whatever sensory evidence the body is actually kind of throwing up at that time.

1:06:50.7 AC: So this is really a very, very powerful story, but when you think about perception as controlled hallucination, we need to take the notion of control pretty seriously. For that reason, in some slightly more philosophical works, I've tried to argue that we should flip the phrase around and think of hallucination as uncontrolled perception, and that that, as it were, puts the boot on the right foot somehow. It lets us say that when these things are working properly, you're in touch with the world. This is a way of using what you know to stay in touch with the world as it matters to an embodied organism like you, trying to do the things you're trying to do. But then, of course, when it goes wrong, when the perception is uncontrolled, if you like, then you get hallucination. You will get a hallucination when you're kind of disconnected from the world and your predictions, your brain's predictions, are doing all the work; and then if you turn the dial in the other direction and your brain's predictions aren't doing enough work, you fail to spot faint patterns in noisy environments. You can be easily overwhelmed. So I feel like thinking of hallucination as uncontrolled perception is actually the better way to do it, even though it's clumsy to say. It doesn't even roll off my tongue that easily, which is why I don't think I actually bothered making that move in The Experience Machine book.

1:08:21.9 SC: It's really hard to resist drawing a parallel with large language models here because, of course, their entire job is predicting what's supposed to come next, right? And guess what? They famously hallucinate, they say things that are completely false, and interestingly, they do things with apparent confidence. They don't hesitate or mumble when they're hallucinating. To them, it comes out just as definitive as the truth does, and maybe there is a parallel there.

1:08:51.1 AC: Yes, I think that's right. I mean, they are... Their hallucinations are in a way, I think, partly at least, the result of them not being anchored in perception-action loops in the right sorts of ways. So it's this anchoring in perception-action loops that sort of teaches us a lesson when we're young. It's like if you get things wrong, bad things are gonna happen to you. If I don't spot the edge of the path in the right place, I'm gonna fall over and I'm gonna get signals that I chronically don't like, as it were, so all the interoceptive predictions are coming into play there. Whereas if all you're doing is predicting the next word in a sentence and your reward is basically being kept alive as a large language model, then why not? Just go the whole hog and be confident about a nice structured piece of bullshit that you can generate. At the same time, it's interesting that by changing the prompts to the large language model, to ChatGPT anyway, you can make it do substantially better. So you can say something like, "Write this for me in the style of a well-informed scientific expert." It makes fewer mistakes. It still tends to hallucinate references, but [chuckle] at least it does a little bit better.

1:10:13.8 AC: But yeah, there's something very thin about just predicting the next symbol. I just feel like it's not very well-anchored in reality, and so hallucinations are kind of... It can't tell the difference between a hallucination and something else. Maybe that's the point. Unfortunately, when we are in the grip of hallucination, we can't tell the difference either.

1:10:35.6 SC: Someone on Twitter coined the term hallucitations, when ChatGPT makes up papers you haven't written. [laughter]

1:10:42.4 AC: I like that.

1:10:43.5 SC: Yeah, they appear all the time. I'm gonna start including them on my CV. Are there implications, if we get down and dirty and not philosophical, for how to treat mental issues that we have, whether it's depression or pain or anything like that, or do we get actionable intelligence from this way of thinking?

1:11:06.8 AC: Yes, I think we do. I mean, it's early days, but I think particularly in the case of pain, there are some clear sorts of recommendations here that are being implemented by people working in what they call pain reprocessing theory. It's just kind of a highfalutin label for the idea that you reframe your pains. So the thought would be that we tend to treat pain as a signal that we shouldn't be doing something, but if you reframe it as, "Okay, my pain signaling system is misfiring," then you can begin to think, "So this pain doesn't mean that I shouldn't be doing this." And it turns out that if you get people involved in those regimes, they start to be able to do a bit more because they're no longer stopping out of fear of the pain. And actually, as they find that they can do more, the pain itself presents itself to them as lessening, I think, because the brain sort of infers, "Well, if I'm doing this stuff, it can't be that bad, can it?" So there's a kind of virtuous cycle that replaces the vicious cycle that was there before, which was, "This is gonna hurt, so I'm not gonna do it. And then if I do start to do it, oh, it really seems to hurt. I'm not doing it."

1:12:26.8 AC: So pain reprocessing theory is one nice case. I suppose self-affirmation is another case, the idea that if you're prone to thinking that perhaps you're not gonna do well in some test because you're in a certain minority group or you're female and it's a math test or something like this, self-affirmation in advance of the test can really make a difference. "I'm good at this, I can do this, lots of people like me do this," that kind of thing. It's important, of course, not to over-egg the custard, as we might say on this side of the Atlantic anyway. You can't reframe having a bacterial infection in a way that is really gonna make any difference to the bacterial infection that you've got. And reframing can make a big difference to cancer-related fatigue, but it doesn't make much difference to the cancer. So I think we have to be aware of the limits. And in general, I don't think that these stories, these accounts, are positive-thinking sorts of accounts. They're more like... There are many factors that are involved as we construct our experiences, and some of those factors are our own expectations.

1:13:52.8 SC: Yeah. Okay. No, that sounds perfectly sensible put that way. I'm not an expert, but my rough impression is that we know embarrassingly little about pain and how it works. It's an understudied area, I guess. I don't know whether it's for moral or psychological reasons, so any little insight might be very helpful.

1:14:10.0 AC: Yeah, yes, I agree. And I think that... I think pain research is actually moving into some very interesting stages now as we understand more about the ways in which people's own expectations make a difference. So even where there's a very standard physiological cause, people's experiences of their pain vary tremendously, and even within a certain individual, their experiences vary tremendously from context to context and day by day in ways that just aren't tracking the organic as well... I don't like this word organic. There's always something organic going on. Aren't tracking the sort of the standard, the standard cause. So what's probably happening is that different contexts are activating different expectations of pain or disability, and without the standard organic cause changing in any way, that's making a difference to how you feel and what you can do.

1:15:07.0 SC: I actually just realized I forgot to ask a crucially important question earlier. We had Jenann Ismael on the podcast a while back, my new colleague at Johns Hopkins, talking about physics and the arrow of time. And through talking to her and through talking to other people, I have this vague idea of why we think that time flows, why we have the sense of time passage, and it's because we are constantly predicting a moment in the future and also remembering a little bit in the past and updating. And the updates happen in one direction of time, and that's what gives us the sense of flow or passage. Given your expertise, does that sound at all on the right track?

1:15:50.0 AC: Yeah, that sounds very... That sounds like a really nice story to me, or account even, it's account there...

1:15:56.3 SC: Account. I like story. Go ahead with story.

[chuckle]

1:16:02.7 AC: It reminds me a little bit, actually, of Husserl, the kind of classic philosophical phenomenologist who had this idea that experience is this sort of... This thing which is rooted in the past, but always looking towards the future and the present is this sort of just where those things meet, whether... I don't know what really gives us the arrow of time, that sort of sense that the idea that you can't sort of unscramble the egg or whatever it is. It's not obvious to me quite why my prediction machinery is kind of what's delivering the fact that it looks like I really can't recreate the egg from the scrambled egg.

1:16:54.6 SC: Well, I think...

1:16:54.9 AC: But that's one for you. That's definitely one for you.

1:16:56.5 SC: That is one for me, and I think that I halfway agree in that the account has not been fully fleshed out, although I'm 100% sure it's ultimately because entropy is increasing. We just have to draw the connections there, which is where useful work could be done.

1:17:10.8 AC: It sounds like a great account of why we think there's an arrow of time.

1:17:14.5 SC: Yeah, why we feel it, right, why that's part of our image of the world. Okay, so then the last question is the flip side of the pain question. There's this idea called the hedonic treadmill, which I think some people have said has been discredited, but the idea that we get happy not because of our overall welfare, but because of changes in our welfare. And if we win the lottery and now we're rich and living in luxury, soon we have exactly the same happiness as we had before. There are challenges to this view, so I'm not even sure if it's true, there's a replication crisis in psychology, everything is very [chuckle] easy to me, but it does seem compatible with the whole predictive modeling view of what the brain is doing. If what we're doing is constantly predicting what's happening next, then can happiness be understood as noticing that our prediction was a little pessimistic and things are actually a little bit better?

1:18:11.1 AC: Yeah, that's interesting. I haven't thought about this, but it sounds like it should fall rather neatly into place with this sort of dampening of the well-predicted. So the fundamental starting point for a lot of these accounts was that the neuronal response to well-predicted sensory inputs is dampened. So if these are sensory inputs that are supposed to be driving pleasurable experiences but you've really been through that 100 million times before, then the pleasure is, I think, going to be diminished, perhaps unless you can actively reach in there with attention and try to stop that happening. So I wonder whether someone that really loves the taste of a particular wine and they've had it a million times before, as long as they can reach in with attention and up the dial on what's coming in through the senses, then maybe they can sort of artificially surprise themselves a little bit, if you see what I mean.

1:19:10.6 SC: I do.

1:19:11.6 AC: I know there's more to it than that, but I feel like there's something there. Wine tasters are told to do this, to sort of sit back and kind of let it speak for itself so that you don't get sucked into your own expectations.

1:19:24.2 SC: But now it brings up questions. I know I said it's the last question, but there's an issue here of high versus low pleasures or simple versus subtle pleasures, right? I mean, I'm a big wine fan and I absolutely do get pleasure from very fancy, complicated, sophisticated wine, and because I can't afford to have it too often, it's not boring to me. But I also get pleasure from the perfect slice of pizza, which is very simple and predictable and whatever, but I get that comforting pleasure. So now I'm not sure what to think.

1:19:55.9 AC: Yes, I think I'm not quite sure what to think about those cases either. It does seem to me that we... Because our brains are prediction-error-minimizing engines, then in a way, we do kind of want to live in worlds that we can predict well and have certain sorts of things, and so when those things are bringing hedonic benefits, then I think existing there is going to be a rather comfortable way of existing, even though we also have a sort of accompanying drive to increase our states of information, to sort of learn a bit more in case it lets us generate a slightly alternative future in which the pizza is square, but we're liking it even better or something. But I think that... I think that if we think about these things delicately as sort of multi-level and multi-dimensional prediction engines, then we can accommodate both the drive to novelty and the attractions of staying within the space where actually all that stuff that wants us to minimize errors is doing rather well in a local sense. Even just looking at a pizza, you're minimizing lots of errors. After all, just to see the shape of the pizza in front of you, you're kind of moving your eyes around, harvesting information, minimizing errors, you're getting some hedonic kick out of it, nothing bad is happening anywhere. It's a pretty comfortable place to be.

[chuckle]

1:21:27.6 SC: I'd like to leave messages for the young intellectuals out there who are deciding what to do with their lives. It sounds like there's a sweet spot here where we do know something about the brain and the body and how they work and how they fit together, but there's still a lot of good questions left on the table to be answered by the future.

1:21:45.6 AC: I think there's a huge number of questions. Every story, every account we've had so far has turned out to be wrong, and I'm sure this one will too, so the question is, what's it a stepping stone towards?

1:21:57.2 SC: Well, it's a very good story you're telling us, Andy Clark. Thanks so much for being on the Mindscape Podcast.

1:22:01.6 AC: Thank you. It's been a real pleasure. Thank you.

[music]
