Dark matter exists, but there is still a lot we don’t know about it. Presumably it’s some kind of particle, but we don’t know how massive it is, what forces it interacts with, or how it was produced. On the other hand, there’s actually a lot we do know about the dark matter. We know how much of it there is; we know roughly where it is; we know that it’s “cold,” meaning that the average particle’s velocity is much less than the speed of light; and we know that dark matter particles don’t interact very strongly with each other. Which is quite a bit of knowledge, when you think about it.

Fortunately, astronomers are pushing forward to study how dark matter behaves as it’s scattered through the universe, and the results are interesting. We start with a very basic idea: that dark matter is cold and completely non-interacting, or at least that its self-interactions (how strongly dark matter particles scatter off each other) are too weak to make any noticeable difference. This is a well-defined and predictive model: ΛCDM, which includes the cosmological constant (Λ) as well as the cold dark matter (CDM). We can compare astronomical observations to ΛCDM predictions to see if we’re on the right track.

At first blush, we are very much on the right track. Over and over again, new observations come in that match the predictions of ΛCDM. But there are still a few anomalies that bug us, especially on relatively small (galaxy-sized) scales.

One such anomaly is the “too big to fail” problem. The idea here is that we can use ΛCDM to make quantitative predictions concerning how many galaxies there should be with different masses. For example, the Milky Way is quite a big galaxy, and it has smaller satellites like the Magellanic Clouds. In ΛCDM we can predict how many such satellites there should be, and how massive they should be. For a long time we’ve known that the actual number of satellites we observe is quite a bit smaller than the number predicted; that’s the “missing satellites” problem. But this has a possible solution: we only observe satellite galaxies by seeing stars and gas in them, and maybe the halos of dark matter that would ordinarily support such galaxies get stripped of their stars and gas by interacting with the host galaxy. The too-big-to-fail problem tries to sharpen the issue, by pointing out that some of the predicted galaxies are so massive that there’s no way they could have failed to form visible stars. Or, put another way: the Milky Way does have some satellites, as do other galaxies; but when we examine these smaller galaxies, they seem to have a lot less dark matter than the simulations would predict.

Still, any time you are concentrating on galaxies that are satellites of other galaxies, you rightly worry that complicated interactions between messy atoms and photons are getting in the way of the pristine elegance of the non-interacting dark matter. So we’d like to check that this purported problem exists even out “in the field,” with lonely galaxies far away from big monsters like the Milky Way.

A new paper claims that yes, there is a too-big-to-fail problem even for galaxies in the field.

> Is there a “too big to fail” problem in the field?
>
> Emmanouil Papastergis, Riccardo Giovanelli, Martha P. Haynes, Francesco Shankar
>
> We use the Arecibo Legacy Fast ALFA (ALFALFA) 21cm survey to measure the number density of galaxies as a function of their rotational velocity, Vrot,HI (as inferred from the width of their 21cm emission line). Based on the measured velocity function we statistically connect galaxies with their host halos, via abundance matching. In a LCDM cosmology, low-velocity galaxies are expected to be hosted by halos that are significantly more massive than indicated by the measured galactic velocity; allowing lower mass halos to host ALFALFA galaxies would result in a vast overestimate of their number counts. We then seek observational verification of this predicted trend, by analyzing the kinematics of a literature sample of field dwarf galaxies. We find that galaxies with Vrot,HI<25 km/s are kinematically incompatible with their predicted LCDM host halos, in the sense that hosts are too massive to be accommodated within the measured galactic rotation curves. This issue is analogous to the "too big to fail" problem faced by the bright satellites of the Milky Way, but here it concerns extreme dwarf galaxies in the field. Consequently, solutions based on satellite-specific processes are not applicable in this context. Our result confirms the findings of previous studies based on optical survey data, and addresses a number of observational systematics present in these works. Furthermore, we point out the assumptions and uncertainties that could strongly affect our conclusions. We show that the two most important among them, namely baryonic effects on the abundances and rotation curves of halos, do not seem capable of resolving the reported discrepancy.
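
For readers who like to see an idea in code, here is a minimal sketch of what “abundance matching” means. It is not the paper’s actual pipeline. The two cumulative number-density functions below are invented power laws (in arbitrary units), chosen only so that small halos vastly outnumber slowly rotating galaxies; the function names and the velocity grid are likewise my own assumptions. The technique itself is just this: pair each galaxy velocity with the halo velocity that has the same cumulative abundance.

```python
import numpy as np

# Toy cumulative number densities n(>V), in arbitrary units.
# Both functions are made up for illustration; they are NOT the
# measured ALFALFA velocity function or a real LCDM halo function.
def n_halos_above(v_halo):
    return 1.0e4 * v_halo ** -3.0   # steep: lots of small halos

def n_galaxies_above(v_rot):
    return 1.0 * v_rot ** -1.0      # shallow: few slow rotators

def abundance_match(v_rot, v_grid):
    """Halo velocity whose cumulative abundance equals that of
    galaxies rotating faster than v_rot."""
    target = n_galaxies_above(v_rot)
    halo_counts = n_halos_above(v_grid)  # decreasing along v_grid
    # np.interp needs increasing x values, so reverse both arrays.
    return np.interp(target, halo_counts[::-1], v_grid[::-1])

v_grid = np.logspace(0, 3, 2000)  # 1 to 1000 km/s (assumed range)
for v_rot in (15.0, 25.0, 100.0):
    print(v_rot, abundance_match(v_rot, v_grid))
```

With these toy inputs the two curves cross at 100 km/s, and every galaxy rotating more slowly than that gets assigned a halo with a larger velocity than its own. That is the qualitative trend the abstract describes: if small halos are far more common than small galaxies, the small galaxies we do see have to live in bigger halos than their rotation suggests.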

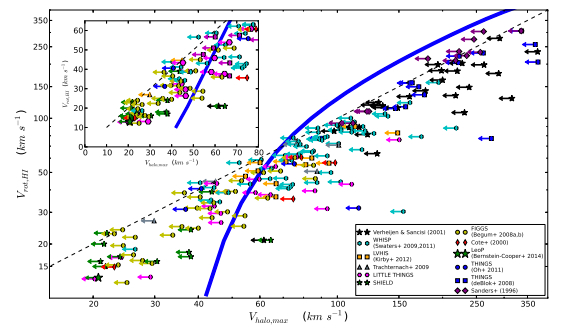

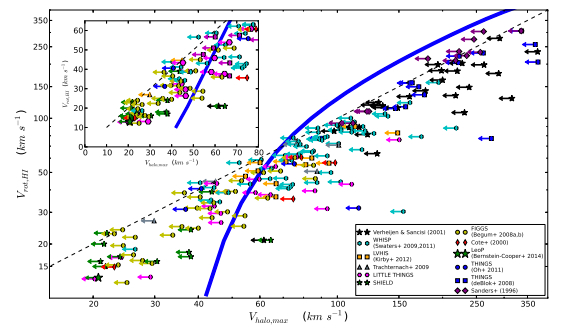

Here is the money plot from the paper:

The horizontal axis is the maximum circular velocity, basically telling us the mass of the halo; the vertical axis is the observed velocity of hydrogen in the galaxy. The blue line is the prediction from ΛCDM, while the dots are observed galaxies. Now, you might think that the blue line is just a very crappy fit to the data overall. But that’s okay; each point is only an upper limit in the horizontal direction, so points that lie below/to the right of the curve are fine, since the true halo could sit anywhere to the left of where the point is drawn. It’s a statistical prediction: ΛCDM is predicting how many galaxies we have at each mass, even if we don’t think we can confidently measure the mass of each individual galaxy. What we see, however, is that there are a bunch of points in the bottom left corner that are above the line. ΛCDM predicts that even the smallest galaxies in this sample should still be relatively massive (have a lot of dark matter), but that’s not what we see.
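
The logic of reading the plot can be written down in a few lines. This is only a sketch under loud assumptions: `predicted_halo_vmax` is a hypothetical stand-in for the blue line (a made-up power law, not the paper’s curve), and the three “galaxies” are invented numbers, not data. The point is the comparison: a galaxy is in trouble only when the upper limit on its halo’s circular velocity falls below what the prediction demands.

```python
def predicted_halo_vmax(v_rot):
    # Hypothetical stand-in for the predicted relation (the blue line):
    # halo maximum circular velocity, in km/s, for a galaxy with
    # observed rotational velocity v_rot. A made-up power law.
    return (1.0e4 * v_rot) ** (1.0 / 3.0)

def is_incompatible(v_rot, vmax_upper_limit):
    # The measurement only bounds the halo velocity from above, so the
    # galaxy is a problem only if even that bound is below the prediction.
    return vmax_upper_limit < predicted_halo_vmax(v_rot)

# Invented examples: (name, v_rot in km/s, upper limit on halo vmax in km/s).
toy_galaxies = [
    ("A", 15.0, 30.0),
    ("B", 20.0, 90.0),
    ("C", 80.0, 120.0),
]

flagged = [name for name, v_rot, limit in toy_galaxies
           if is_incompatible(v_rot, limit)]
print(flagged)
```

In this toy setup only galaxy A is flagged: the prediction wants a host halo bigger than its rotation curve can accommodate. Galaxies B and C sit to the right of the line, which is perfectly fine for upper limits.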

If it holds up, this result is really intriguing. ΛCDM is a nice, simple starting point for a theory of dark matter, but it’s also kind of boring. From a physicist’s point of view, it would be much more fun if dark matter particles interacted noticeably with each other. We have plenty of ideas, including some of my favorites like dark photons and dark atoms. It is very tempting to think that observed deviations from the predictions of ΛCDM are due to some interesting new physics in the dark sector.

Which is why, of course, we should be especially skeptical. Always train your doubt most strongly on those ideas that you really want to be true. Fortunately there is plenty more to be done in terms of understanding the distribution of galaxies and dark matter, so this is a very solvable problem — and a great opportunity for learning something profound about most of the matter in the universe.