Thanksgiving

This year we give thanks for Arrow’s Impossibility Theorem. (We’ve previously given thanks for the Standard Model Lagrangian, Hubble’s Law, the Spin-Statistics Theorem, conservation of momentum, effective field theory, the error bar, gauge symmetry, Landauer’s Principle, the Fourier Transform, Riemannian Geometry, the speed of light, the Jarzynski equality, the moons of Jupiter, space, black hole entropy, and electromagnetism.)

Arrow’s Theorem is not a result in physics or mathematics, or even in physical science, but rather in social choice theory. To fans of social-choice theory and voting models, it is as central as conservation of momentum is to classical physics; if you’re not such a fan, you may never have even heard of it. But as you will see, there is something physics-y about it. Connections to my interests in the physics of democracy are left as an exercise for the reader.

Here is the setup. You have a set of voters {1, 2, 3, …} and a set of choices {A, B, C, …}. The choices may be candidates for office, but they may equally well be where a group of friends is going to meet for dinner; it doesn’t matter. Each voter has a ranking of the choices, from most favorite to least, so that for example voter 1 might rank D first, A second, C third, and so on. We will ignore the possibility of ties or indifference concerning certain choices, but they’re not hard to include. What we don’t include is any measure of intensity of feeling: we know that a certain voter prefers A to B and B to C, but we don’t know whether (for example) they could live with B but hate C with a burning passion. As Kenneth Arrow observed in his original 1950 paper, it’s hard to objectively compare intensity of feeling between different people.

The question is: how best to aggregate these individual preferences into a single group preference? Maybe there is one bully who just always gets their way. But alternatively, we could try to be democratic about it and have a vote. When there are more than two choices, however, voting becomes tricky.

This has been appreciated for a long time, for example in the Condorcet Paradox (1785). Consider three voters and three choices, coming out as in this table.

| Voter 1 | Voter 2 | Voter 3 |
| --- | --- | --- |
| A | B | C |
| B | C | A |
| C | A | B |

Then simply posit that one choice is preferred to another if a majority of voters prefer it. The problem is immediate: more voters prefer A over B, and more voters prefer B over C, but more voters also prefer C over A. This violates the transitivity of preferences, which is a fundamental postulate of rational choice theory. Maybe we have to be more clever.
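The cycle can be checked mechanically. Here is a minimal Python sketch that applies pairwise majority rule to the three rankings in the table above; the function names are just illustrative choices, not anything from Condorcet or Arrow.

```python
from itertools import combinations

# Rankings from the table above, most preferred first.
ballots = [
    ["A", "B", "C"],  # Voter 1
    ["B", "C", "A"],  # Voter 2
    ["C", "A", "B"],  # Voter 3
]

def majority_prefers(ballots, x, y):
    """Return True if a strict majority of ballots rank x above y."""
    votes_for_x = sum(1 for b in ballots if b.index(x) < b.index(y))
    return votes_for_x > len(ballots) / 2

def pairwise_majorities(ballots):
    """Return the set of (winner, loser) pairs under pairwise majority rule."""
    choices = sorted(ballots[0])
    result = set()
    for x, y in combinations(choices, 2):
        if majority_prefers(ballots, x, y):
            result.add((x, y))
        elif majority_prefers(ballots, y, x):
            result.add((y, x))
    return result

# A beats B, B beats C, and C beats A: a cycle, so no consistent group ranking.
print(pairwise_majorities(ballots))
```

Every choice loses to some other choice by a 2-to-1 margin, which is exactly the failure of transitivity described above.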

So, much like Euclid did a while back for geometry, Arrow set out to state some simple postulates we can all agree a good voting system should have, then figure out what kind of voting system would obey them. The postulates he settled on (as amended by later work) are:

- Nobody is a dictator. The system is not just “do what Voter 1 wants.”

- Independence of irrelevant alternatives. If the method says that A is preferred to B, adding in a new alternative C will not change the relative ranking between A and B.

- Pareto efficiency. If every voter prefers A over B, the group prefers A over B.

- Unrestricted domain. The method provides group preferences for any possible set of individual preferences.

These seem like pretty reasonable criteria! And the answer is: you can’t do it. Arrow’s Theorem proves that, once there are three or more choices, there is no ranked-choice voting method that satisfies all of these criteria. I’m not going to prove the theorem here, but the basic strategy is to find a subset of the voting population whose preferences are always satisfied, and then find a similar subset of that population, and keep going until you find a dictator.

It’s fun to go through different proposed voting systems and see how they fall short of Arrow’s conditions. Consider for example the Borda Count: give 1 point to a choice for every voter ranking it first, 2 points for second, and so on, finally crowning the choice with the fewest points as the winner. (Such a system is used in some political contexts, and frequently in handing out awards like the Heisman Trophy in college football.) Seems superficially reasonable, but this method violates the independence of irrelevant alternatives. Adding in a new option C that many voters put between A and B will increase the distance in points between A and B, possibly altering the outcome.
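To see that failure concretely, here is a small Python sketch using the scoring convention just described (1 point for first place, fewest points wins). The two five-voter profiles are invented purely for illustration, and breaking ties alphabetically is an arbitrary assumption of this sketch.

```python
def borda_scores(ballots):
    """Sum positions: 1 point for first place, 2 for second, and so on."""
    scores = {}
    for ballot in ballots:
        for position, choice in enumerate(ballot, start=1):
            scores[choice] = scores.get(choice, 0) + position
    return scores

def borda_ranking(ballots):
    """Order choices from fewest points (best) to most (worst).
    Ties are broken alphabetically, an arbitrary choice for this sketch."""
    scores = borda_scores(ballots)
    return sorted(scores, key=lambda c: (scores[c], c))

# Hypothetical profile with only A and B on the ballot.
before = 3 * [["A", "B"]] + 2 * [["B", "A"]]

# Same voters after C is added. Nobody changes their mind about A versus B,
# but two voters slot C in between B and A.
after = 3 * [["A", "B", "C"]] + 2 * [["B", "C", "A"]]

print(borda_scores(before), borda_ranking(before))  # A: 7, B: 8, so A ranks first
print(borda_scores(after), borda_ranking(after))    # A: 9, B: 8, C: 13, so B ranks first
```

No voter's opinion of A relative to B changed between the two profiles, yet the group ranking of A and B flipped once C entered the race.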

Arrow’s Theorem reflects a fundamental feature of democratic decision-making: the idea of aggregating individual preferences into a group preference is not at all straightforward. Consider the following set of preferences:

| Voter 1 | Voter 2 | Voter 3 | Voter 4 | Voter 5 |
| --- | --- | --- | --- | --- |
| A | A | A | D | D |
| B | B | B | B | B |
| C | D | C | C | C |
| D | C | D | A | A |

Here a simple majority of voters have A as their first choice, and many common systems will spit out A as the winner. But note that the dissenters seem to really be against A, putting it dead last. And their favorite, D, is not that popular among A’s supporters. But B is ranked second by everyone. So perhaps one could make an argument that B should actually be the winner, as a consensus not-so-bad choice?

Perhaps! Methods like the Borda Count are intended to allow for just such a possibility. But the Borda Count has its problems, as we’ve seen. Arrow’s Theorem assures us that all ranked-voting systems are going to have some kind of problems.
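For the five-voter table above, the difference between counting first choices and counting positions can be worked out directly. This is a minimal Python sketch; the Borda scoring follows the convention used earlier in the post (1 point for first place, fewest points wins), and the function names are illustrative.

```python
from collections import Counter

# Rankings from the five-voter table above, most preferred first.
ballots = [
    ["A", "B", "C", "D"],  # Voter 1
    ["A", "B", "D", "C"],  # Voter 2
    ["A", "B", "C", "D"],  # Voter 3
    ["D", "B", "C", "A"],  # Voter 4
    ["D", "B", "C", "A"],  # Voter 5
]

def plurality_winner(ballots):
    """Only first choices count; the most frequent one wins."""
    first_choices = Counter(ballot[0] for ballot in ballots)
    return first_choices.most_common(1)[0][0]

def borda_scores(ballots):
    """Sum positions: 1 point for first place, 2 for second, and so on."""
    scores = {}
    for ballot in ballots:
        for position, choice in enumerate(ballot, start=1):
            scores[choice] = scores.get(choice, 0) + position
    return scores

scores = borda_scores(ballots)
borda_winner = min(sorted(scores), key=lambda c: scores[c])

print(plurality_winner(ballots))  # A, with 3 of 5 first-place votes
print(scores)                     # {'A': 11, 'B': 10, 'C': 16, 'D': 13}
print(borda_winner)               # B, the consensus second choice
```

A wins on first-place votes, but B edges it out by a single point once the two last-place rankings for A are taken into account.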

By far the most common voting system in the English-speaking world is plurality voting, or “first past the post.” There, only the first-place preferences count (you only get to vote for one choice), and whoever gets the largest number of votes wins. It is universally derided by experts as a terrible system! A small improvement is instant-runoff voting, sometimes just called “ranked choice,” although the latter designation implies something broader. There, we gather complete rankings, count up all the top choices, and declare a winner if someone has a majority. If not, we eliminate whoever got the fewest first-place votes, and run the procedure again. This is … slightly better, as it allows for people to vote their conscience a bit more easily. (You can vote for your beloved third-party candidate, knowing that your vote will be transferred to your second-favorite if they don’t do well.) But it’s still rife with problems.
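The instant-runoff procedure can be sketched in a few lines of Python. This version assumes every ballot ranks every choice, and it breaks ties for elimination alphabetically, which is an arbitrary assumption of the sketch rather than a feature of any real election law. The nine-voter profile is hypothetical.

```python
from collections import Counter

def instant_runoff(ballots):
    """Repeatedly eliminate the choice with the fewest top-place votes
    until some choice holds a strict majority of ballots."""
    remaining = set(ballots[0])
    while True:
        # Each ballot counts for its highest-ranked choice still in the race.
        tallies = Counter({choice: 0 for choice in remaining})
        for ballot in ballots:
            top = next(c for c in ballot if c in remaining)
            tallies[top] += 1
        leader = max(sorted(remaining), key=lambda c: tallies[c])
        if tallies[leader] * 2 > len(ballots):
            return leader
        # No majority yet: drop the weakest choice and count again.
        loser = min(sorted(remaining), key=lambda c: tallies[c])
        remaining.remove(loser)

# Hypothetical profile: A leads on first choices but lacks a majority.
ballots = (
    4 * [["A", "B", "C"]]
    + 3 * [["B", "C", "A"]]
    + 2 * [["C", "B", "A"]]
)

print(instant_runoff(ballots))  # B: C is eliminated and its 2 votes transfer to B
```

Under plurality voting A would win this hypothetical race with 4 of 9 votes; under instant runoff the C voters' second choices put B over the top, 5 to 4.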

One way to avoid Arrow’s result is to allow for people to express the intensity of their preferences after all, in what is called cardinal voting (or range voting, or score voting). This allows the voters to indicate that they love A, would grudgingly accept B, but would hate to see C. This slips outside Arrow’s assumptions, and allows us to construct a system that satisfies all of his criteria.
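As a rough illustration of how scores change the picture, here is a minimal Python sketch of score voting in which each voter rates every choice from 0 to 10 and the highest total wins. The scale, the three ballots, and the alphabetical tie-break are all made up for this example.

```python
def score_winner(score_ballots):
    """Add up each choice's scores; the highest total wins.
    Ties are broken alphabetically, an arbitrary choice for this sketch."""
    totals = {}
    for ballot in score_ballots:
        for choice, score in ballot.items():
            totals[choice] = totals.get(choice, 0) + score
    winner = max(sorted(totals), key=lambda c: totals[c])
    return winner, totals

# Hypothetical ballots on a 0-10 scale. Two voters love A and can live with B;
# the third hates A, likes C best, and is nearly as happy with B.
score_ballots = [
    {"A": 10, "B": 6, "C": 0},
    {"A": 10, "B": 7, "C": 0},
    {"A": 0, "B": 8, "C": 10},
]

print(score_winner(score_ballots))  # ('B', {'A': 20, 'B': 21, 'C': 10})
```

A is the first choice of two out of three voters, but B wins on total score because nobody strongly objects to it. That is information a pure ranking cannot carry.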

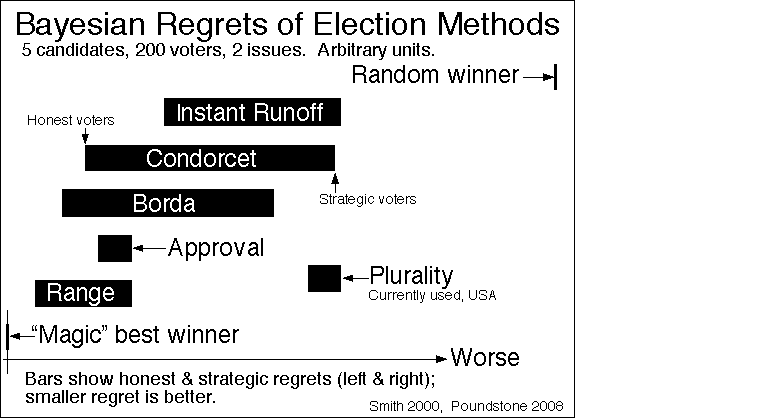

There is some evidence that cardinal voting leads to less “regret” among voters than other systems, for example as indicated in this numerical result from Warren Smith, where it is labeled “range voting” and left-to-right indicates best-to-worst among voting systems.

On the other hand — is it practical? Can you imagine elections with 100 candidates, and asking voters to give each of them a score from 0 to 100?

I honestly don’t know. Here in the US our voting procedures are already laughably primitive, in part because that primitivity serves the purposes of certain groups. I’m not that optimistic that we will reform the system to obtain a notably better result, but it’s still interesting to imagine how well we might potentially do.